AI Hallucinations are Increasing

Plus, AI Governance as the new HR, Le Chat, Insurance for AI, and new Trump licensing regime for chips

Hello from sunny Dublin (yes, you heard that right!). The Trustible team is in Dublin, Ireland, for this year’s IAPP AI Governance Global conference. The conference is full of AI governance practitioners from leading enterprises looking to scale their efforts. Trustible is doing demos and handing out swag. If you’re here, come by our booth!

In today’s edition (5-6 minute read):

AI Hallucinations are Increasing

AI Governance May Become the New HR

European AI Enters Le Chat

The Rise of Insurance for AI

Trump Administration Eyes New Bargain with AI Chips

1. AI Hallucinations are Increasing

OpenAI’s newest reasoning models, o3 and o4-mini, hallucinate at alarming rates, according to OpenAI’s own benchmarks. On SimpleQA, a dataset of fact-seeking questions with publicly available answers, o3 gave an accurate response only 49% of the time and o4-mini only 20% of the time. While these results look alarming, there are several factors to consider:

Tool Use: These results are based on model outputs without tool use, such as web search. An OpenAI blog post from earlier this year showed GPT-4o’s accuracy on the same benchmark rising from 38% to 90% when a “Web Search agent” was integrated. This capability is integrated by default into ChatGPT and can easily be enabled for API users.

Prompts and Priorities: A recent study suggests that hallucination rates increase significantly when the model is instructed to be concise or when it has to contradict a claim from the user. In both cases, the model’s priorities around instruction-following and helpfulness outweigh producing truthful outputs (OpenAI recently rolled back a GPT-4o update over overly sycophantic behavior). These findings suggest that hallucinations can be reduced with framing that encourages factuality over other behaviors. The increased hallucinations in o3 and o4-mini could stem from a related trade-off: reasoning models are rewarded for exploring diverse solutions, which could make them less adept at answering simple factual questions.

Root Causes: While it has been speculated that LLMs hallucinate because they are “next word prediction models” with no grasp of factuality, some recent research suggests that the models do encode some types of knowledge and, in some cases, are aware of when they hallucinate. However, this type of research is still in early stages and does not suggest that hallucinations can be eliminated entirely.

AI hallucinations have made numerous headlines over the past two years, ranging from Google’s search AI telling users to eat rocks to ChatGPT citing fake cases in legal briefs. In practice, a handful of mitigations can reduce many of the potential harms associated with hallucinations:

AI Literacy Education: Users need to clearly understand the potential weaknesses of a system and the types of outputs that may need additional checking. Education can be integrated before and during system use.

External Tools: Integrating an LLM with the internet or an internal database (through a retrieval-augmented generation, or RAG, pattern) can significantly reduce hallucinations. However, the system is only as good as its external sources (which may contain inaccuracies) and could still hallucinate given the right context.

Prompting Techniques: Certain prompting techniques can reduce hallucination by encouraging factual verification over other behaviors.

Output Fact-Checking: The AI system can be augmented with a guardrail model that detects potential hallucinations in its outputs.
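To make the output fact-checking idea concrete, here is a deliberately simple sketch in Python. It is a toy stand-in for a guardrail model, assuming the system already has the retrieved source passages (as in a RAG setup): it flags any answer sentence whose content words are mostly absent from those sources. The function names, stopword list, and 0.6 threshold are hypothetical choices for illustration. Production guardrails typically rely on trained classifiers or entailment models rather than word overlap.

```python
import re

# Hypothetical toy guardrail: word overlap as a crude proxy for "supported by sources".
STOPWORDS = {"the", "a", "an", "is", "was", "of", "in", "on", "and", "to",
             "for", "by", "it", "that", "with", "as", "at"}


def content_words(text: str) -> set[str]:
    """Lowercased alphanumeric tokens, minus common stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def flag_unsupported(answer: str, sources: list[str], threshold: float = 0.6) -> list[str]:
    """Return answer sentences whose content-word coverage by the sources is below threshold."""
    # Union of all content words that appear anywhere in the retrieved sources.
    source_vocab = set().union(*(content_words(s) for s in sources))
    flagged = []
    # Naive sentence split on ., ! or ? followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = content_words(sentence)
        if not words:
            continue  # nothing checkable in this sentence
        coverage = len(words & source_vocab) / len(words)
        if coverage < threshold:
            flagged.append(sentence)
    return flagged


sources = ["The Eiffel Tower is in Paris and was completed in 1889."]
answer = "The Eiffel Tower is in Paris. It was completed in 1925 by Napoleon."
print(flag_unsupported(answer, sources))  # ['It was completed in 1925 by Napoleon.']
```

With these inputs, the first sentence passes because every content word appears in the source, while the second is flagged because the year and the named builder are unsupported. Word overlap cannot catch a false claim assembled entirely from source vocabulary, which is one reason real guardrails use semantic checks instead.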

Key Takeaway: As models gain advanced reasoning capabilities, some appear to hallucinate more, and public explanations for the phenomenon remain speculative. While research into model interpretability is making progress in detecting some hallucinations, it is unlikely that hallucinations will be eliminated entirely, so consistent AI literacy education and system-level protections will remain an ongoing requirement.

2. AI Governance May Become the New HR

It seems hard to find any recent interview with a Silicon Valley leader where AI agents aren’t a major discussion topic. The general consensus among them is that agentic AI systems will soon start to affect the employment market as employers either replace employees or simply hire less over time. Some startups have controversially capitalized on that message for publicity, posting advertisements recommending that organizations stop hiring humans. We’ll save a discussion of whether AI agents will actually displace jobs at the pace they describe for another time; instead, we want to briefly discuss another idea: if AI agents are the new ‘employees’, is AI governance the new ‘HR’?

We can look at a few of the key functions that HR typically covers, and how they relate to AI agents:

Hiring - HR teams are typically in charge of hiring activities, which include building role descriptions, identifying appropriate candidates, and overseeing the interview and onboarding process.

AI agents also need to be given clear tasks (roles), different systems may be better suited to certain tasks than others (finding candidates), and the selected systems must then be properly integrated with an organization’s other systems (onboarding).

Compensation - HR teams help establish what compensation is appropriate for different roles/jobs, and oversee compensation changes based on performance.

AI agents have costs associated with them, and will also have costs associated with their mistakes. AI governance functions will be responsible for ensuring that agentic AI remains cost-effective for the organization on an ongoing basis.

Regulatory Compliance - HR teams are typically responsible for ensuring certain kinds of employment compliance standards are met, including dealing with labor union issues, enforcing workplace safety laws, and building compliant processes for benefits, compensation, legal issues, etc.

While the regulatory environment for AI, and especially for AI agents, is still forming, it’s very likely that there will be various kinds of restrictions on them that need to be reviewed and enforced. For example, many laws may require a ‘human in the loop’ for AI systems, along with guidelines for what that oversight looks like, which will have to be enforced.

Performance over time - HR teams often oversee processes for evaluating employee performance, and offer solutions for over and underperforming employees (promotion and termination). How performance is measured, documented, and acted on can be a major challenge for many organizations.

AI agents will also have core performance metrics they are evaluated against, and, much like humans, their performance may drift over time. Highly reliable agentic AI systems may be relied on more heavily or given expanded responsibilities (promoted), while underperforming ones should be cut (terminated). Reviewing the performance of AI systems is a key AI governance activity.

Training - HR teams often oversee employee education and training programs to ensure an organization’s workforce follows appropriate policies and remains qualified for their positions.

AI agents will need regular updates, either in the form of direct model updates, or updated systems they’re integrated with. They may additionally need regular fine-tuning to incorporate new feedback about their own performance.
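The ‘performance over time’ analogy can be sketched as a small monitor that tracks an agent’s task success rate over a rolling window and maps it to a governance recommendation. This is a hypothetical illustration in Python: the class name, window size, and the 0.95 ‘expand’ and 0.80 ‘retire’ thresholds are assumptions. A real program would track several metrics (accuracy, cost, incident rate) with thresholds set by the organization.

```python
from collections import deque


class AgentPerformanceMonitor:
    """Hypothetical monitor: rolling task success rate mapped to a governance action."""

    def __init__(self, window: int = 50, promote_at: float = 0.95, retire_below: float = 0.80):
        # deque with maxlen drops the oldest outcome automatically once full.
        self.outcomes: deque = deque(maxlen=window)  # True = task succeeded
        self.promote_at = promote_at
        self.retire_below = retire_below

    def record(self, success: bool) -> None:
        self.outcomes.append(success)

    def success_rate(self) -> float:
        if not self.outcomes:
            return 0.0
        return sum(self.outcomes) / len(self.outcomes)

    def recommendation(self) -> str:
        # Withhold judgment until the window is full, so a few early results don't decide.
        if len(self.outcomes) < self.outcomes.maxlen:
            return "insufficient data"
        rate = self.success_rate()
        if rate >= self.promote_at:
            return "expand"  # the 'promotion' analogue
        if rate < self.retire_below:
            return "retire"  # the 'termination' analogue
        return "keep monitoring"


monitor = AgentPerformanceMonitor(window=10)
for outcome in [True] * 9 + [False]:
    monitor.record(outcome)
print(monitor.recommendation())  # keep monitoring (rate 0.9)
for _ in range(3):
    monitor.record(False)
print(monitor.recommendation())  # retire (rate 0.6)
```

In the usage above, nine successes and one failure yield a 0.9 rate and "keep monitoring". Three further failures push the oldest successes out of the window, dropping the rate to 0.6 and triggering "retire". The rolling window is what lets the monitor catch drift, rather than averaging it away over the agent’s full history.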

Key Takeaway: There will likely be a dedicated field of ‘AI Agent Governance’ in the future that mirrors many of the ways we oversee and govern human workers in a business. Perhaps ironically, the role of overseeing AI systems may be the very last human role to be fully replaced by AI (or, in some extreme circumstances, the first to be terminated).

3. European AI Enters Le Chat

Last week, France’s leading AI company, Mistral, launched an enterprise version of Le Chat, its ChatGPT competitor. According to its CEO, the lead-up to this launch tripled the company’s revenue over the past 100 days, with most of the interest unsurprisingly coming from European firms. While the international ‘AI Race’ has typically been framed as the US vs China, the size of the EU market as a whole suggests it may be too early to count the EU out of the race entirely. Historically, homegrown European technology companies have often struggled to compete with their US counterparts, frequently ending up acquired by better-capitalized US companies. In the past few weeks alone, for example, Europe-based FreeNow and Deliveroo agreed to be acquired by Lyft and DoorDash, respectively. However, EU leaders have taken notice: their recently published AI Continent Action Plan outlines major AI investments and proposes a strong preference for EU-built AI.

A number of recent policy shifts in the US may contribute to the development of more EU-centric AI. These include reduced scientific funding, tighter immigration policies, and aggressive tariff policies that have aggravated even close allies and may spread beyond manufactured goods to cultural products that Europe also produces, such as movies. The US tech sector has long benefited from a ‘brain drain’ of the world’s top talent, with 60% of US-based AI ‘unicorns’ having at least one immigrant founder (including Elon Musk). The confluence of these policies, coupled with the EU’s apparent openness to simplifying its digital regulations, may ironically energize an EU tech sector that competes with American and Chinese tech companies on a global scale, at a time when the majority of the US’s economic growth has come from its technology services sector.

Our Take: Many Silicon Valley AI leaders have welcomed the Trump Administration’s positions on crypto and AI regulation, which may help them in the short term. However, other policies have shifted the world’s attitude towards the US in ways that may be more harmful to US AI adoption in the long term, and may ironically help fuel a new, competitive EU tech sector.

4. The Rise of Insurance for AI

As more organizations integrate AI into their services and products, they also expose themselves to new legal risks. Much has been said about the risks stemming from AI, such as algorithmic bias, hallucination, and intellectual property infringement. However, how liability is allocated within the AI ecosystem when these risks materialize has yet to be adequately addressed. Laws like the EU AI Act and Colorado’s SB 24-205 deal with organizational AI governance, but are virtually silent on how liability is determined when AI systems cause harm. A novel type of insurance coverage may forge a new bond between AI governance and AI liability.

Companies like Vouch and Armilla (which has partnered with established global insurer Lloyd’s) are venturing into AI liability insurance. The premise is simple: when something goes wrong with an AI product or service, these providers help cover the costs. Insurance for technology-specific issues is not new; cybersecurity insurance is essentially required for any modern business. However, the terms of coverage may be a catalyst for organizations to rapidly adopt AI governance structures. For instance, Armilla’s offerings include performance assurances as well as compliance and fairness checks. Just as cybersecurity insurance typically requires basic security controls for coverage, we will likely see baseline AI governance requirements for coverage as more providers venture into AI insurance.

Our Take: Insurance coverage for AI mishaps is not a substitute for AI governance, nor does it address broader AI liability questions. However, it does help bridge the gap between AI governance and the financial liabilities that come with AI.

5. Trump Administration Eyes New Bargain with AI Chips

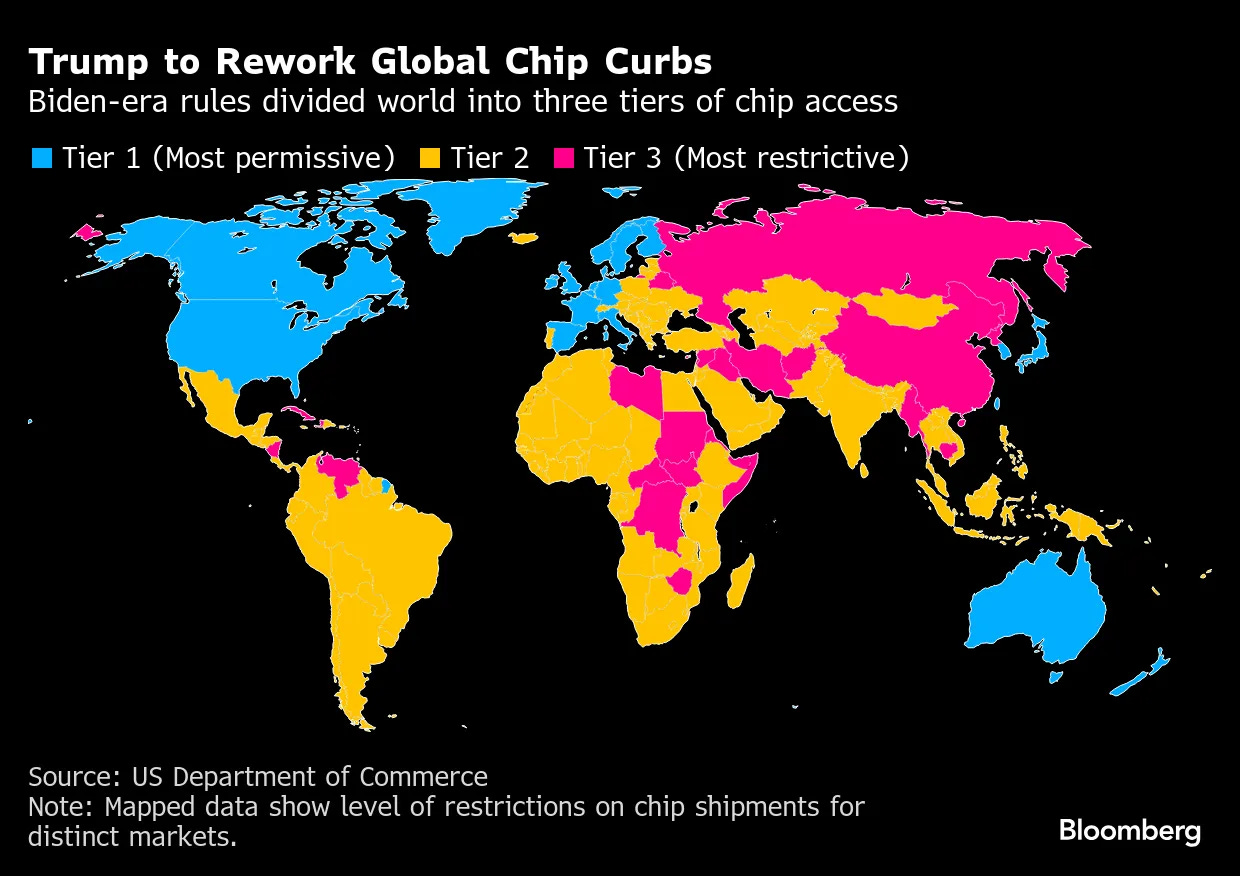

The Trump Administration continues undoing Biden-era technology policy, this time as it relates to AI chip exports. Before leaving office in January 2025, the Biden Administration issued the Framework for Artificial Intelligence Diffusion, which would limit access to AI chips and technologies by China and other adversarial countries. The rule established a three-tiered licensing regime for AI chips:

Tier 1 countries were U.S. allies, which received license exemptions.

Tier 2 countries were all other nations not in Tier 1 or Tier 3, which would need licenses to import a capped number of chips pending an application review that was presumptively approved.

Tier 3 countries were those subject to U.S. arms embargoes (e.g., China and Russia), which would require a license to import chips pending an application review that was presumptively denied.

According to Trump Administration officials, the Biden-era rule was “overly bureaucratic, and would stymie American innovation.” While the revamped rule has not been made public, it appears that the Trump Administration may scrap the tiered approach in favor of country-by-country licensing agreements.

Our Take: There are clear national security interests driving the need to restrict who can access advanced AI chips. However, a more decentralized licensing regime also plays well into the Trump Administration's protectionist trade policy and gives the Administration further leverage for favorable trade deals.

—

As always, we welcome your feedback on content and how to improve this newsletter!

AI Responsibly,

- Trustible team