AI models, committees, reliance, and safety

Deep dive into 4 current themes influencing our work

Hello! The world of AI is changing fast. In today’s edition, we cover some practical AI governance advice – such as the implications of bringing your organization’s own AI models into your IT stack and how AI Governance Committees can operate more effectively – along with higher-level topics such as the balancing act between underreliance and overreliance on AI and the changing landscape of AI safety worldwide.

In today’s edition (6 minute read):

BYOM – Bring Your Own Model

Enhancing the Effectiveness of AI Governance Committees

Balancing Overreliance and Underreliance of AI

Global Leaders Leave AI Safety Behind

1. BYOM – Bring Your Own Model

Generative AI is slowly but surely being integrated into most technology and software solutions today. From our own observations, some of those integrations have proven to be very powerful and useful (such as GitHub Copilot), while for others we have struggled to find effective uses (such as GenAI in Jira). Even with the price of GenAI falling rapidly, many software providers have used the inclusion of GenAI to increase the price per user or to tie the AI features directly to a per-user premium. This calculus makes sense if each user relies heavily on these AI tools on a regular basis and the tools yield significant business value. However, that’s rarely the case, as different personas use different sets of tools, vary widely in their AI literacy, and have different tasks to accomplish. This creates a massive cost management challenge for organizations, which may be paying premiums for AI offerings they aren’t using or only need on occasion. What may be especially frustrating is that many of the basic capabilities of LLMs have now been largely commoditized across models, making the AI features they power undifferentiated as well.

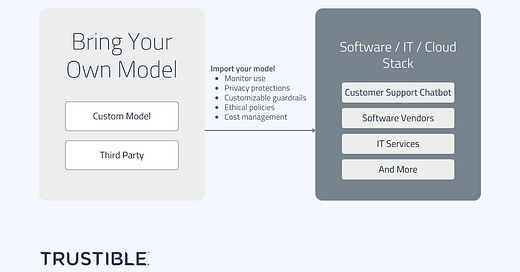

In response to this growing organizational inefficiency, we see a world in which ‘bringing your own model’ may be the preferred option, especially for large enterprises. An organization could stand up its own deployment of models in the cloud and allocate compute to its own employees to use as they see fit. These self-hosted models can then be integrated, via API, with the suite of software tools the organization already uses. If those software tools were already using the same or a very similar model, there should be little risk of performance differences. For AI-enabled organizations, this ‘bring your own model’ (BYOM) approach has many potential benefits, including the ability to efficiently monitor use, improved privacy protections, customized guardrails or ethical policies, and better cost management. There may be some initial pushback from software vendors who consider their prompts proprietary, who leverage fine-tuned models, or who see reselling AI as a key driver of growth. Despite this, the governance needs of large enterprises may outweigh those concerns; there’s an analogy here to how large enterprises may still require ‘on-prem’ software deployments in the cloud age, with similar issues at stake around access, cost management, and security.
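To make the monitoring and cost management benefit concrete, here is a minimal Python sketch of how an organization might meter usage of a self-hosted model by team. It is an illustration under stated assumptions, not a reference design: the `UsageMeter` class, the team names, and the internal chargeback rate are all hypothetical, and a real deployment would record these numbers at whatever gateway sits in front of the model.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class UsageMeter:
    """Tracks token usage per team for a hypothetical self-hosted model gateway."""

    # Assumed internal chargeback rate (illustrative, not a real vendor price).
    price_per_1k_tokens: float
    tokens_by_team: dict = field(default_factory=lambda: defaultdict(int))

    def record(self, team: str, prompt_tokens: int, completion_tokens: int) -> None:
        """Add one request's token counts to the team's running total."""
        self.tokens_by_team[team] += prompt_tokens + completion_tokens

    def cost_report(self) -> dict:
        """Return the cost attributed to each team, based on tokens actually used."""
        return {
            team: round(tokens / 1000 * self.price_per_1k_tokens, 4)
            for team, tokens in self.tokens_by_team.items()
        }


# Example: two teams with very different usage share one deployment.
meter = UsageMeter(price_per_1k_tokens=0.002)
meter.record("engineering", prompt_tokens=1200, completion_tokens=800)
meter.record("legal", prompt_tokens=300, completion_tokens=200)
meter.record("engineering", prompt_tokens=500, completion_tokens=500)
report = meter.cost_report()
```

Because cost follows actual usage rather than seat count, a team that rarely uses AI features is charged very little, which is the contrast with per-user premiums described above.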

Key Takeaways: As LLMs have become increasingly commoditized, there isn’t a strict need for a software vendor to provide an AI model to power application features. The governance advantages of a ‘BYOM’ world may prevail over the preferences of vendors.

2. Enhancing the Effectiveness of AI Governance Committees

Many organizations have created cross-functional AI governance committees responsible for strategy, governance, and oversight of their AI systems. But any sort of committee comes with challenges of its own, including how to build accountability, get buy-in for the committee's efforts, and ensure that everyone is equally informed.

Trustible recently did a deep dive into best practices for AI Governance committees, and how our platform can help accelerate, standardize, and scale their efforts. In it, we discuss the top priorities of AI governance committees, why they must act now, and how the features and capabilities of the Trustible platform fit into their operational and strategic priorities.

Download our published white paper here.

3. Balancing Overreliance and Underreliance of AI

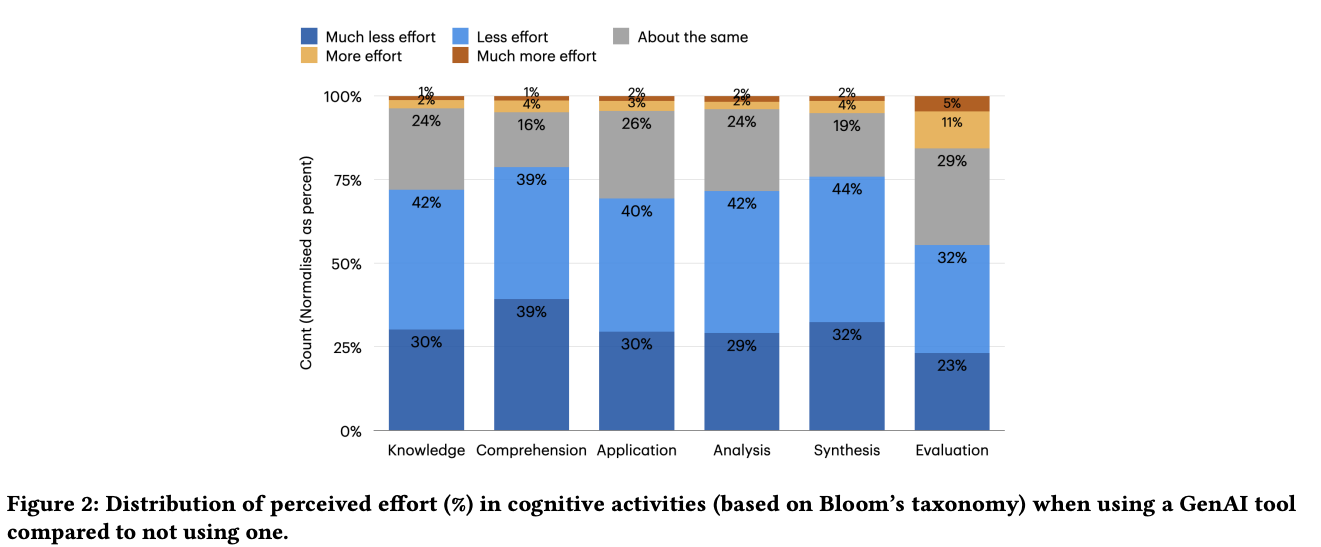

A recent large-scale survey by Microsoft and Carnegie Mellon University of 319 professionals provides new insights into “automation bias,” which is the tendency to uncritically accept AI-generated outputs. The study reveals how overreliance on AI tools may be dulling our critical thinking skills, as users often bypass essential validation steps, especially for routine tasks. Such overreliance on AI can lead to many real-world harms, like incorporating outdated or hallucinated information into reports or using insecure or faulty code.

Overreliance is not a new phenomenon and has been studied for many years in the context of decision-making systems, especially in high-risk areas like medicine. However, Generative AI introduces new challenges because of its widespread availability and because it is changing how many people approach “critical thinking” tasks. For example, many of the study’s participants described moving from directly “doing” the task to playing more of a supervisory role, where they verify and integrate AI outputs rather than create everything from scratch. Conversely, some participants perceived greater effort in some tasks, particularly when evaluating and applying AI responses. This can lead to underreliance on AI, where the strengths of an AI system are not fully exploited.

The ideal symbiotic state is one of “appropriate reliance,” where users are empowered by the AI system but clearly understand its limits. For instance, a user might ask ChatGPT to summarize a document but set aside time to review the output and verify its accuracy. A few key mitigations can help reduce overreliance:

User Education. Individuals can learn the limitations of AI systems. For example, the study found that participants engaged critical thinking skills when they were aware of potential negative impacts. Training may need to be tailored to different levels of AI literacy.

UI Design. AI systems can be designed to encourage critical thinking. This can be approached in a “proactive” manner, where the system interrupts the user to highlight the need and opportunity for critical thinking, or a “reactive” one where the user can explicitly request assistance when it is consciously needed. In addition, seamful design techniques can encourage the user to pause and actively engage with the AI System.

System/Process Protections. Organizations can implement procedures at the organization or system level to broadly reduce overreliance harms. For example, generated code may be required to undergo security scanning and manual review.
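As one illustration of a system-level protection, the Python sketch below encodes a merge rule in which AI-generated code must pass both a security scan and human review before it is accepted. Everything here is hypothetical: the `ChangeRequest` fields, the `may_merge` function, and the default of one required approval are assumptions made for the example, not a description of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class ChangeRequest:
    """A hypothetical code change awaiting merge."""

    ai_generated: bool          # whether the change was produced with a GenAI tool
    security_scan_passed: bool  # result of an automated security scan
    human_approvals: int        # number of manual reviews that approved the change


def may_merge(change: ChangeRequest, required_approvals: int = 1) -> bool:
    """Return True if the change satisfies the (assumed) review policy."""
    reviewed = change.human_approvals >= required_approvals
    if change.ai_generated:
        # AI-generated code needs both the scan and the manual review.
        return change.security_scan_passed and reviewed
    # Other changes follow the normal review requirement only.
    return reviewed


# Example: an AI-generated change with approvals but a failed scan is blocked.
blocked = may_merge(ChangeRequest(ai_generated=True, security_scan_passed=False, human_approvals=2))
allowed = may_merge(ChangeRequest(ai_generated=True, security_scan_passed=True, human_approvals=1))
```

Putting the rule in the process rather than leaving it to individual judgment means the validation step cannot be skipped on routine tasks, which is where the study found it is most often bypassed.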

Key Takeaways: Overreliance is a growing risk of AI use, with this recent survey showing that many trust Generative AI outputs without engaging in critical thinking processes. User education and system designs can mitigate some of the harms associated with this trend. On the other hand, tackling underreliance remains an open challenge: while increased AI literacy lowers overreliance, studies show that it can lead to lower AI receptivity and increase the perceived effort of “supervising” AI systems.

4. Global Leaders Leave AI Safety Behind

Vice President JD Vance arrived at the Paris AI Action Summit and declared “I'm not here this morning to talk about AI safety … I'm here to talk about AI opportunity.” His remarks signal a fundamental shift in how governments are thinking about AI. The U.S. is the most notable mover, as President Trump lays out an AI agenda that dismantles “barriers to American AI innovation.” This began with repealing President Biden’s AI Safety Executive Order but has started to permeate elsewhere across the Federal Government.

One clear example of this shift is the NIST AI Safety Institute (AISI). Since President Trump repealed the AI Safety Executive Order, many have speculated on the fate of AISI. Axios reported that NIST staff expect a forthcoming round of layoffs that would leave AISI “gutted.” AISI was already struggling after the resignation of its Director and the exclusion of its staffers from the U.S. delegation to the Paris AI Action Summit. The impending layoffs put the final nail in the coffin for AI safety priorities from the U.S. Executive Branch.

However, the pivot away from AI safety is not isolated to the U.S. Our previous newsletter explored the rise of the EU First AI Agenda, and its timing is far from coincidental. France, Germany, and Italy have voiced concerns about restrictive AI regulations (i.e., the EU AI Act). The desire to realign with the new AI innovation ethos was on full display at the Paris AI Action Summit, which focused on AI innovation and opportunities. This stands in contrast to the UK’s 2023 summit, entitled “AI Safety Summit 2023.” Ironically, less than two years after its safety summit, the UK government rebranded its AI Safety Institute as the “AI Security Institute” in a move seen as aligning with the Trump Administration's AI agenda. Moreover, the EU is running into additional roadblocks with its AI regulatory agenda, as it appears that the third draft of the General-Purpose AI Code of Practice will be delayed by at least a month. The stated rationale for the delay is to allow additional time to incorporate stakeholder feedback, but it may also signal a shift towards diluting some guidelines in favor of technology companies.

Our Take: It is not doomsday for AI safety. There are many actors within the AI ecosystem that have a vested interest in demonstrating that their tools are developed responsibly and with safety in mind. However, policymakers around the world are becoming reluctant cheerleaders for those safety priorities. We do not expect this trend to change, especially as India prepares to host the next global AI summit.

*********

As always, we welcome your feedback on content and how to improve this newsletter!

AI Responsibly,

- Trustible team