AI Regulations Prioritizing Speed Over Input

Plus, what is an AI guardrail, algorithmic monoculture, and AI risks & mitigations taxonomy.

Hi there! Wishing all of our US-readers a good Labor Day weekend. In today’s edition:

AI Regulations Prioritizing Speed Over Input

Trustible Launches AI Risk & Mitigations Taxonomy

Defining AI Guardrails

Algorithmic Monoculture Could Make Markets and Society Less Diverse

1. AI Regulations Prioritizing Speed Over Input

This year is set to be a banner year for AI regulations both internationally and domestically. In Europe, the EU AI Act entered into force on August 1, 2024, which started the clock on enforceable obligations. Back in the United States, Colorado passed the first comprehensive state AI law (SB 205) that imposes rules for certain high risk AI systems. Meanwhile, California lawmakers are grappling with approximately 30 AI-related bills before the end of the legislative session on August 31, with SB 1047 gaining the most attention. However, flaws in the forthcoming AI regulations raise questions over whether the need for speed outweighed thoughtful input from stakeholders.

The EU AI Act’s first set of obligations for prohibited systems is set to come into effect in February 2025. Yet, complications with implementing those rules may push actual enforcement into summer 2025. There have also been concerns over the timeliness of the EU’s ability to draft codes of practice for General Purpose AI providers. In the U.S., when Colorado Governor Polis signed SB 205, he did so with reservations over some of the bill's provisions. Since being signed into law, the state’s lawmakers announced that they will amend parts of the new law during the 2025 legislative session. In California, lawmakers have rushed to amend SB 1047 after industry voiced concerns about provisions related to liability and the creation of an AI-specific state agency.

Our Take: Enacting AI laws require a balance between speed and thoughtful input. While the process for the EU AI Act began in April 2021, the complex regime may have benefited from additional external stakeholder input on topics like practical implementation timelines and clarifications over covered entities (e.g., deployers and providers). Likewise, the speed to pass bills like SB 205 and SB 1047 demonstrate the perils of a more deliberate stakeholder engagement process. While there is still time to address deficiencies in these various regulations, allowing for thoughtful stakeholder input initially can avoid some of the implementation pitfalls that arise once these bills become law.

2. Trustible Launches AI Risk & Mitigations Taxonomy

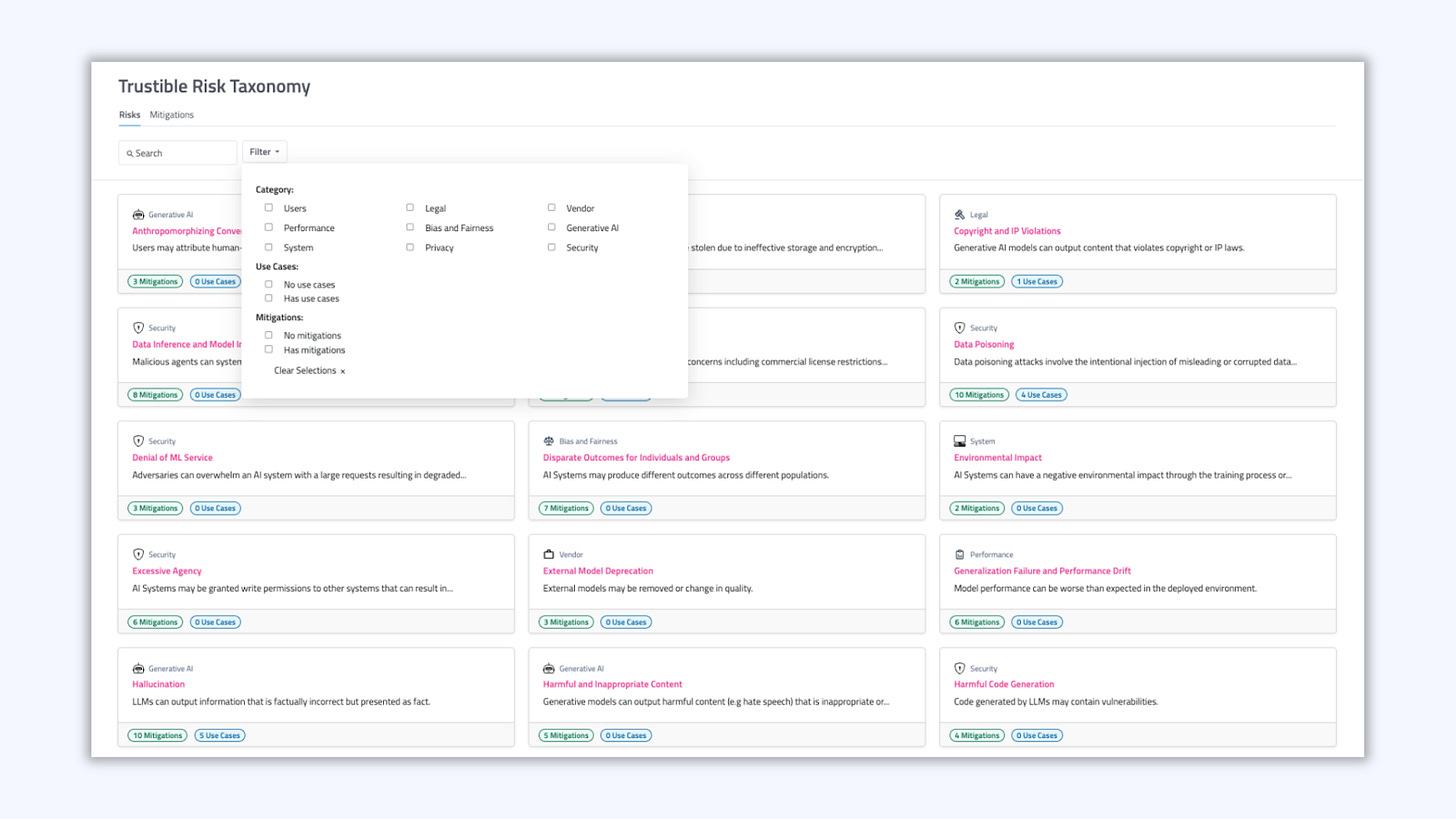

We’re excited to announce our new Risk & Mitigation Taxonomy – a detailed library and guidance of risks, harms, and mitigations inherent to enterprise AI.

This launch includes:

44 AI Risks - These are AI-specific risk events tied to AI use cases. We have in-depth description of each risk, links to published research about each of these, and guidance for how to measure the likelihood and severity of each risk.

68 Risk Mitigations - Each mitigation has a full description, suggested tools and audit evidence, and is linked to the risks that they reasonably mitigate.

Moreover, recommendation models throughout our platform recommend where and when these risks & mitigations may be relevant to your use cases.

This is a continuation of how we’re incorporating our team’s expertise in AI/ML and policy & regulations into building a turnkey AI governance platform that has the latest insight & research on AI safety.

To learn more, click here.

3. Defining AI Guardrails

The recently released Grok-2 model from xAI created controversy due to its ability to generate images of real people, violence, and illegal activity. Such abilities are in contrast to many other major generative models (e.g. Dall-E and Gemini) that refuse to generate similar content. The difference between these models is in the former’s lack of Guardrails: the set of rules and safety mechanisms designed to ensure that models operate within ethical and safe boundaries. There is no one official set of guardrails; different providers will incorporate a different set of strategies. Common mechanisms include:

Model Training for Safety: After pre-training (when the model learns basic content generation abilities), safety tuning processes (e.g. RLHF or Constitutional AI) can be used to teach the model to favor safe outputs and refuse to answer certain questions. This guardrail is incorporated into many models; however, prompt hacking techniques have been shown to override these controls. Furthermore, even a small amount of fine-tuning by a user has been shown to undo these protections.

Input and Output Checks: Integrating checks on input prompts and model outputs provides another layer of protection. These checks are likely already integrated with closed-source models and can be added to open-source ones using content moderation tools and specialized libraries which include checks for hallucinations and attempted prompt-injections.

System Design: Beyond moderating a specific interaction, AI Systems as a whole can be designed to mitigate unwanted behavior. For example, the GPT-4o fine-tuning system may prevent certain training data from being used to avoid destabilizing behavior. Hallucination protections may require a model to link specific sources, and websites can provide warnings about potential sensitive content.

The challenge with built-in guardrails is that they assume a universal definition of the “ethical and safe” boundaries that they enforce. Such a definition does not exist due to varying cultural norms and system contexts (e.g. an application for students vs an artist creating thought-provoking art). Even content moderation systems, which can be used as an output guardrail, have been shown to contain biases against certain populations. Some model providers will publish the norms integrated into their systems (e.g. Open AI’s Model Spec or Anthropic’s Constitution), but these provide limited insight into the actual guardrails inside these systems.

Key Takeaway: Guardrails are a broad term for controls on AI systems that ensure safe operations. While models and solutions with built-in guardrails may provide a reasonable solution for many use cases, unrestricted models like Grok can give users more transparency and control over their systems.

4. Algorithmic Monoculture Could Make Markets and Society Less Diverse

While a lot of the focus on AI is on frontier models or ‘high risk’ use cases, some of the most substantial harm may actually come from simpler, smaller AI models used at scale. In particular, when a mass group is equipped with the same data and the same algorithm, even small, normally trivial characteristics can have an outsized impact. Last week, the DOJ joined several State’s antitrust investigations and lawsuits into the real estate rental pricing platform RealPage. The suits accuse RealPage of pushing up rent prices nationwide by allowing landlords across entire markets to indirectly collude via a third party algorithm to push prices up. While this isn’t leveraging AI per se, the use of near real-time data intelligence and suggested pricing foreshadows how AI can be used to negatively impact society when deployed at scale.

When specific algorithms reach a certain level of market penetration, their potential harms become more than the sum of the potential harm to individuals. For example, an algorithm to suggest new music to listen to based on your preference may be considered fairly low risk. However if a single algorithm has a massive market share, and then has a bias or flaw against a specific type of music, it could disadvantage entire genres of music and cause tangible harm to aspiring artists. Academics have coined the term ‘algorithmic monoculture’ to describe this phenomenon. The idea that our society could become less diverse and small algorithmic flaws or changes could have snowballing effects on society as a result of everyone using the same algorithms. We can imagine the harmful impacts if entire market sectors followed the same advice, if every company used the same resume filtering algorithm, or everyone got the same news feeds. One natural safeguard against this is to ensure that there is extremely healthy competition between AI models, and other algorithms, or that we start creating algorithmic diversity within platforms as well, but the mounting costs of creating new AI systems could create a serious barrier to this.

Key Takeaway: Seemingly low-risk AI use can cause harm if it reaches a huge scale. This is often because the set of things not being recommended, generated, or collected may come from a specific population. The pushback against “algorithmic monoculture” is challenged by potential regulatory lock-in, and the rising costs of training new, competitive AI models.

*********

As always, we welcome your feedback on content and how to improve this newsletter!

AI Responsibly,

- Trustible team