Closed vs open source will define 2024's AI debates

Plus: Google Gemini's ethics, Air Canada's legal headaches, and new state-level AI executive orders

Hello! So much is happening in the world of AI and AI governance that it’s hard to keep up. Hopefully you’re finding some time to stay sane! Today’s read: 7 minutes.

The discussion around Open vs Closed AI

Gemini and the AI ethics conundrum

Air Canada’s Chatbot and its new legal risks

An analysis of state level executive orders

Introducing a new kind of risk: AI worms

ICYMI – Here’s the recording of our LinkedIn Live featuring Nationwide, Prudential, and Guardian titled ‘Operationalizing AI governance in insurance.’

–

1. Open vs Closed AI

One of the fiercest debates in the AI world is over the benefits vs risks of open source AI. While Elon Musk’s lawsuit against OpenAI for pivoting their mission away from open source AI, and Mistral’s deal with Microsoft for a potentially closed-source model captured many headlines last week, the real policy debate over the issue is happening elsewhere.

Per President Biden’s Executive Order, the National Telecommunications and Information Administrate (NTIA) recently opened up their comment period about the trade-offs and ‘dual use potential’ of foundational models. The main question at hand: do the safety, research, and innovation benefits of open source AI outweigh the potential risks of misuse, misinformation, or criminal enablement?

Within the private sector, several companies including IBM, Meta, and HuggingFace have created the AI Alliance to promote open AI development. Meanwhile, Microsoft, OpenAI, Google, and Anthropic have created the Frontier Model Foundation to advocate for the interests of closed-source developers. Within academia, there are similar divides. A recent paper from Stanford’s Center for Research for Foundational Models argues that the evidence for additional marginal risk from foundational models is low, mainly because creating harmful content was never as costly as spreading it. They argue that many of the proposed policy options, such as model development licensing, wouldn’t meaningfully mitigate many of the risks but would severely hurt smaller developers and safety research. In contrast, a 2023 paper from the UK based Centre for the Governance of AI concluded that sufficiently advanced and capable models were too risky to be immediately open sourced. This thinking is reflected in the EU AI Act’s ‘systemic risk’ general purpose AI requirements.

Our Take: Governance of open source AI isn’t just a national issue – it has significant international implications and the debate about it will likely continue for a long time. While researchers are right that access to open source AI assists with research and innovation, it also complicates regulatory enforcement in a lot of ways. Some potentially desirable policy options like watermarking, ethical data collection, or restricting harmful uses become difficult to enforce if anyone is able to freely download, and modify, a highly capable system. This will be the discussion to watch in 2024.

2. Threading the AI Ethics Needle

Google suffered massive backlash last week after releasing the text-to-image generation capabilities of Gemini, Google’s latest flagship generative AI model. Gemini was generating images of historical events and figures with more diversity than existed at the time such as racially diverse Nazis. The deliberate racial diversity built into the system was in direct response to previous criticisms of many image generation tools of not generating sufficiently diverse images; for example, many first generation systems when asked to generate images of a ‘CEO’ would show a middle-aged white man.

Google’s systems are not unique in their bias, but their massive scale and past history of AI bias, likely exacerbated the backlash. Naturally, the incident got quickly enwrapped in ‘culture war’ discussions with some accusing Google of being too ‘woke’ or revisionist and criticized ‘AI Ethics.’ While many AI ethicists have argued that it was the lack of proper ethical considerations and a heavily rushed timeline that led to this. The debate over what constitutes ‘AI bias’ is likely going to continue as more powerful systems emerge and they get integrated into regular use.

Our Take: There probably isn’t a way to fully avoid any sort of generative AI backlash. At best, It seems like many people want generated images to depict the past accurately, but generate the diverse present or future we want to see. It’s unclear how to teach AI models this nuance, and the debate over what future we want to see is a heavily politicized issue that will be contentious. Read our white paper outlining a simple, actionable framework for evaluating ethical decisions for AI systems.

3. When Chatting is Hurting: How Air Canada’s Chatbot Went Awry

On February 14, 2024, Air Canada was ordered to pay Jake Moffatt $812.02 after the airline’s chatbot provided Moffatt with incorrect information about its bereavement policy. In November 2022, Moffatt visited Air Canada’s website to book a ticket from Vancouver to Toronto for a funeral. While visiting the site, he interacted with Air Canada’s experimental chatbot and inquired about the company’s bereavement policy. The chatbot informed Moffatt that he could seek a reimbursement within 90 days of the ticket date by completing a refund application form. Unbeknownst to Moffatt, Air Canada’s policy did not give retroactive refunds for bereavement travel. The airline contended that, had Moffat visited the part of the website with the actual bereavement policy, he would have known that the chatbot provided him with inaccurate information.

As part of its defense, Air Canada attempted to argue that it was not responsible for the chatbot’s actions - essentially claiming that the chatbot was a “separate legal entity that is responsible for its own actions.” Subsequent reporting determined it was the first time a Canadian company claimed it could not be held responsible for its chatbot’s actions. The court was unpersuaded by this assertion, characterizing it as “remarkable.” Instead, the court found that it should have been obvious to Air Canada that it would be responsible for information on its website, whether it “comes from a static page or a chatbot.”

Our Take: Chatbots are typically seen as low-risk AI use cases that can help businesses lower costs and improve the customer experience. However, companies should be on notice about their potential liability when their chatbots do not perform as intended. The Air Canada saga serves as yet another example of the types of harm that can be caused by chatbots and the potential litigation that can ensue as a result. Moreover, companies should consider how their oversight processes can ensure customer-facing AI use cases, like chatbots, rely on up-to-date inputs and produce accurate outputs.

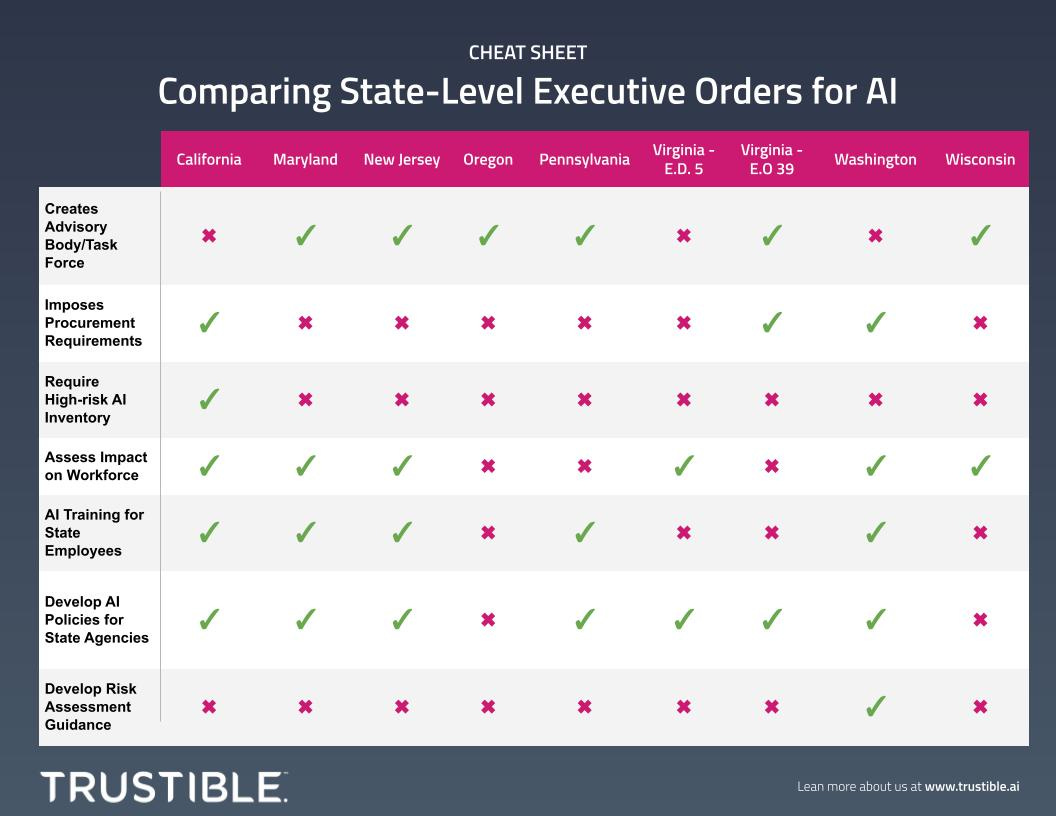

4. States Introduce Executive Orders for AI

State legislatures are not alone in taking action on AI. Over the past year, eight Governors have signed Executive Orders designed to gain insights on various aspects of how AI is deployed, procured, and used by state agencies. In our latest blog post, we explore the scope of these executive actions, as well as their recurring themes, shortcomings, benefits, and implications for businesses.

Key Takeaways: Many States are looking to ensure that AI is used responsible within State agencies.

5. A New Type of Risk: AI Worms

According to a recent report by AI security experts and an associated Wired article, setting up a ‘Retrieval Augmented Generation’ (RAG) could introduce new kinds of exploits as risks, including introducing an ‘AI Worm’. Researchers demonstrated how they could introduce prompt injections into emails that were inserted into a vector database, accessed by an LLM, and then generated undesirable content. In addition, they demonstrated in a simulated environment how to get one LLM to generate their malicious instructions and pass it to another service also leveraging an LLM. This self-replication is one of the key characteristics of a computer worm and opens new risks, especially for any AI agent that has the ability to call APIs. While detecting and mitigating possible ‘injection’ attacks isn’t new in the security field, the nature of natural language prompts could make this task more difficult. Ironically the one tool that might be the most effective at detecting these kinds of exploits is a large language model itself.

Key Takeaways: While RAG patterns can significantly reduce possible LLM hallucinations, they could introduce new potential vulnerabilities. The entire field of AI security is new and we expect to see an arms race between AI powered security attacks, and AI powered security defenses.

*********

As always, we welcome your feedback on content and how to improve this newsletter!

AI Responsibly,

- Trustible team