EU AI Act has a final text, Davos loves AI trust, and states regulate AI

Plus, will we soon enter a data poisoning arms race?

Welcome! Lots to cover this week. In today’s edition (6-minute read):

The final text of the EU AI Act has been leaked

AI trust leads the conversation at Davos & CES

Gartner report shows AI governance can lead to increased revenue

Data poisoning gets easier

A state-by-state look at AI regulations in 2023

Don’t forget to register for our LinkedIn Live next Wednesday, January 31, discussing the FTC’s approach to regulating AI with former FTC Chair Jon Leibowitz and Wilson Sonsini partner Maneesha Mithal.

1. The final text of the EU AI Act has been leaked

It’s only a matter of weeks before the EU AI Act gets enacted.

The final text of the EU AI Act was leaked on Monday, January 22, by Euractiv journalist Luca Bertuzzi, followed later that day by another version released by an EU Parliament staffer. This gives the public its first glimpse of the final text reflecting the political agreement reached during the final trilogue session in December 2023. EU lawmakers are rushing to formally vote on and adopt the text by February 2, although there are rumors that France is trying to amend the final text before then. Here are a few key insights from the final text relevant to organizations developing or deploying AI:

AI systems that do not pose a significant risk of harm to people's health, safety, or fundamental rights, including by not materially influencing the outcome of decisions, are not considered ‘high-risk’.

High-risk AI systems require impact assessments covering the system's intended purpose, the people it affects, potential harms, and human oversight measures.

The new draft introduces dedicated obligations for general-purpose AI (GPAI) models. Providers must notify the European Commission if their model meets the criteria for a general-purpose AI model with systemic risk.

Our take: France's last-minute efforts are unlikely to succeed and mainly reflect the French government's interest in promoting its own domestic AI ecosystem. As a result, the published final text will likely be formally adopted, with some provisions taking effect in 2025. The law will have implications for companies around the world, including in the US.

2. AI trust leads the conversation at Davos & CES

AI was the center of attention at both the Consumer Electronics Show (CES) in Las Vegas and the World Economic Forum's Annual Meeting in Davos. While 2023 may have been the year of prototyping AI use cases, 2024 looks set to be the year of the first at-scale commercial applications built on the latest AI models. One theme ran through both the innovation and the risk conversations: trust.

A few highlights related to AI governance:

CES showcased dozens of new AI-enabled products, from AI snowblowers and baby monitors to ChatGPT in cars and dedicated AI keys on laptops. Despite the excitement around consumer products, leaders in many other industries (such as healthcare and financial services) acknowledge that AI development and adoption will "move at the speed of trust."

OpenAI CEO Sam Altman told Axios in Davos that he sees “uncomfortable choices” ahead for model developers, because AI will give different answers to different users based on their values and preferences, and possibly on the country where they reside.

Microsoft CEO Satya Nadella said he sees a global consensus on the need for AI regulation, even though how the technology is regulated may differ from one jurisdiction to another.

PwC surveyed 4,700 CEOs ahead of Davos. While 25% anticipate job reductions this year due to generative AI, a substantial 75% foresee major industry shifts within three years. Nearly half (45%) believe their companies will not be viable a decade from now without substantial changes to how they do business.

Why it matters: These events are two of the most important global gathering points for leaders in technology, business, and politics, and global alignment on AI adoption and safety is at the top of their agenda.

3. Key Stat: Responsible AI = Better AI

According to Gartner, organizations that have implemented AI governance frameworks have seen more successful AI initiatives, improved customer experience, and increased revenue.

Our take: AI governance is often perceived as slowing down AI adoption, perhaps because it requires careful assessment of a system before deployment and throughout its lifecycle. However, this Gartner report (and others like it) shows that AI governance can help generate and protect revenue, because it leads to better AI products that users trust more. Responsible AI = Better AI = $$

4. Nightshade: The Creators Strike Back

Following an announcement last year, researchers at the University of Chicago have released Nightshade, a tool that deliberately ‘poisons’ images so that they degrade models trained on them. It is intended to help visual artists and creators protect their content from being used for model training without their permission. While this seems like a big ‘win’ for content creators, the tool also raises potential dual-use concerns and will likely set off a data poisoning ‘arms race’.

Dual-use challenge: Many platforms rely heavily on automated content filters to identify illegal, inappropriate, or offensive content. The same techniques Nightshade uses to skew how an AI model interprets an image could potentially be used to evade these filters.

Data poisoning arms race: Before tools like Nightshade became available, targeted 'data poisoning attacks' (intentionally releasing content to influence models trained on that data) were uncommon and typically state-sponsored. Tools like Nightshade will force companies to filter and process their training data more carefully, and will likely prompt attempts to reverse data poisoning. This could easily result in a costly cycle of escalating poisoning attempts and increasingly sophisticated detection systems.

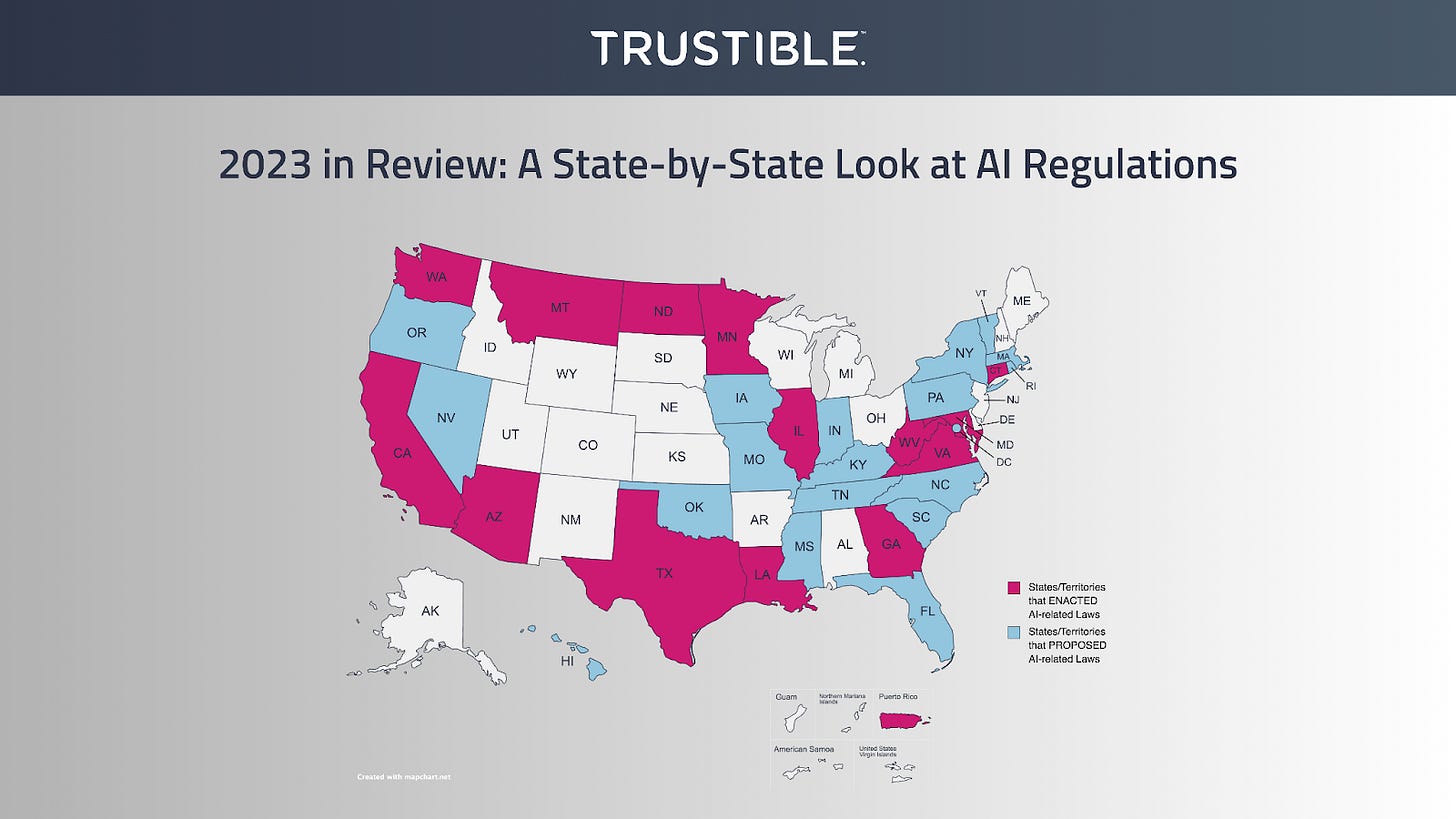

5. 2023 in Review: A State-by-State Look at AI Regulations

In the US, states continue to introduce and enact AI regulations at an accelerating pace. Here's a snapshot of the key developments in state-level AI regulation in 2023:

🏛 Nearly 200 AI-related bills were considered across 33 jurisdictions, including Puerto Rico and Washington, D.C. Most of these focused on sector-specific AI applications.

❗ A major theme in these bills was the regulation of AI in high-risk scenarios, with a focus on preventing discrimination and ensuring transparency in AI decisions.

🤖 States (and New York City) targeted AI use in key sectors, including government (Connecticut, California), employment (Illinois, New York City), healthcare (California, New Jersey), and insurance (Colorado, Rhode Island), with goals such as transparency in decision-making, non-discrimination, and ethical use.

Looking ahead, expect states to remain at the forefront of AI regulation, adding to the compliance complexity for many organizations.

*********

As always, we welcome your feedback on our content and on how to improve this newsletter!

AI Responsibly,

- Trustible team