How do you measure the benefits of AI?

Plus: Moderna’s OpenAI case study, new models from Microsoft and Snowflake, and Connecticut AI bill gets closer to adoption

Hi there! Lots of substantive updates on AI adoption, new models, AI laws, and risk techniques in today’s Trustible Newsletter edition. Let’s get to it (8-minute read).

Measuring the Benefits of AI

Moderna’s New Case Study with OpenAI

New Transparency Ratings for Microsoft’s Phi-3 and Snowflake’s Arctic

Connecticut Moves Closer to Comprehensive AI Law

NIST Releases Generative AI Risk Profile and GenAI Testing Tools

1. A Framework for Measuring the Benefits of AI

As the AI era goes from fantasy to reality, many organizations are taking a hard look at where they want to operationally deploy the new technology – in particular Generative AI. For many organizations, the actual costs and risks of GenAI are becoming clear, but deciding where and how to deploy it requires precise measurements of the system's benefits as well. Many businesses talk in general terms of ‘productivity’ or ‘efficiency’, but for highly data-driven organizations, it has become essential to scientifically define, measure, and evaluate the actual return on investment of their GenAI capabilities. In addition to measuring ROI, upcoming regulations and industry standards like ISO 42001 expect organizations to conduct impact assessments, which require identifying relevant impacted stakeholders and measuring exactly how they may benefit from, or be harmed by, an AI system. Understanding whether the benefits outweigh the risks is the bedrock of ethical AI.

To assist organizations with this, Trustible has developed a framework for measuring the benefits of AI. The framework outlines stakeholder types, benefit categories, and a taxonomy of benefits along with ways of measuring them. The benefits taxonomy is integrated with the Trustible platform and helps organizations analyze the benefits, risks, and costs associated with any AI use case.

Key Takeaway: Organizations need to be specific about the intended or actual benefits of AI to ensure they are deploying it in an ethical and responsible way.

2. Moderna’s Case Study with OpenAI

OpenAI recently released a case study with Moderna highlighting how the pharmaceutical and biotechnology company is leveraging OpenAI tools across its enterprise. CEO Stéphane Bancel says the productivity of thousands of people has significantly increased: “A team of a few thousand can perform like a team of 100,000.”

Moderna’s objective was to achieve 100% adoption and proficiency of generative AI by all its people with access to digital solutions in six months. Here are some of the outcomes (thanks to Allie Miller for this concise summary):

750 custom GPTs built across the company within 2 months

40% of weekly active users created their own GPTs

Each user has 120 ChatGPT conversations per week on average (that’s 3 per hour!)
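The "3 per hour" figure follows from a quick conversion, sketched below. The 40-hour work week is our assumption rather than something stated in the case study, and the function name is purely illustrative.

```python
# Assumption: a standard 40-hour work week (not stated in the case study).
HOURS_PER_WEEK = 40


def conversations_per_hour(per_week: float, hours_per_week: float = HOURS_PER_WEEK) -> float:
    """Convert a weekly conversation count into an average hourly rate."""
    return per_week / hours_per_week


# 120 conversations per week, as reported in the summary above.
print(conversations_per_hour(120))
```

With a different assumed work week (say 50 hours), the hourly rate drops accordingly, so the figure is best read as a rough average.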

Some GPTs they've built:

💊 Dose ID - analyzes clinical trial data to optimize vaccine dose selection

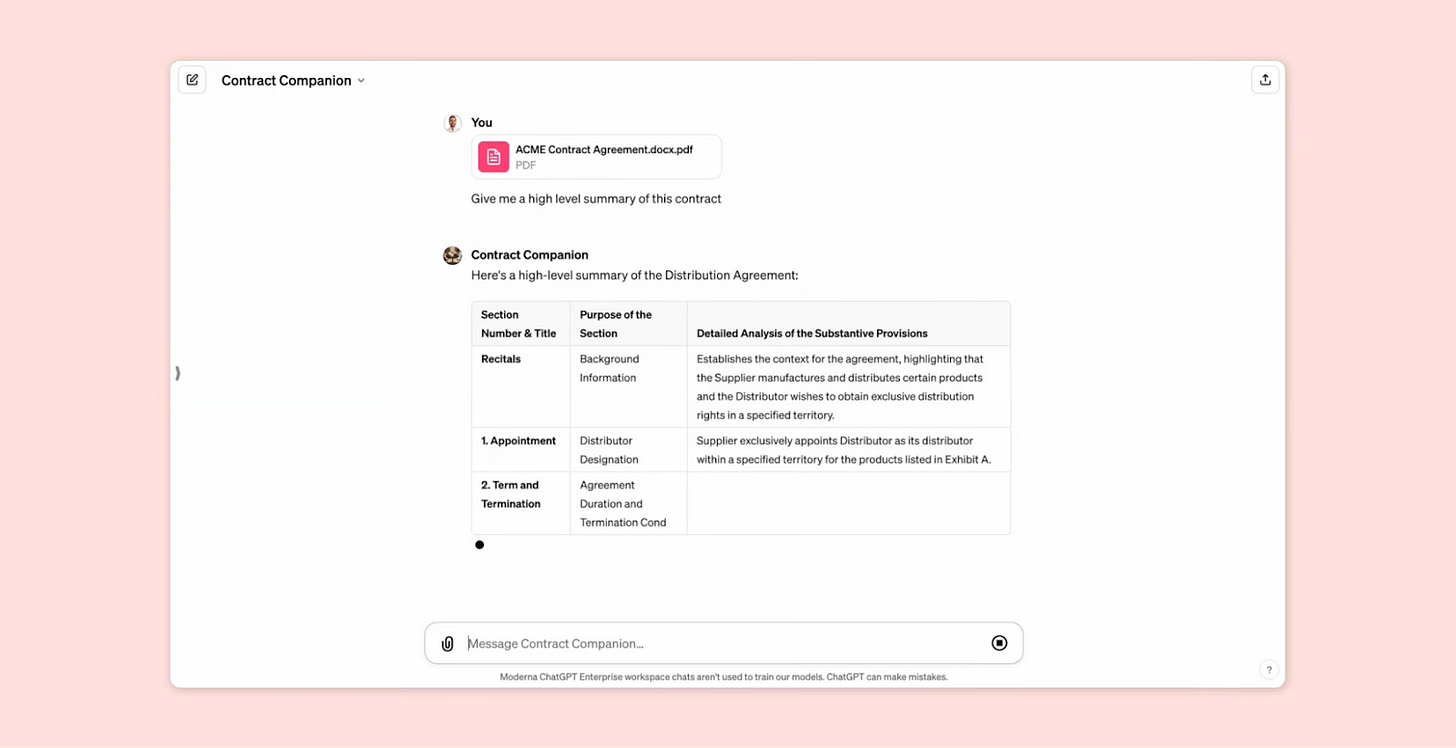

📑 Contract Companion - generates clear summaries of legal contracts

📜 Policy Bot - provides instant answers on company policies

📈 Earnings Prep Assistant - crafts slides for quarterly investor calls

🗣️ Brand Storyteller - tailors company messaging for different audiences

Our Take: This is one of the first public case studies by OpenAI on a large regulated enterprise adopting Generative AI *at scale*. Despite slower industry adoption due to talent shortages, data management challenges, and risk concerns, Moderna’s successful use cases could help accelerate adoption of Generative AI in other regulated enterprises. However, we were disappointed not to see any mention of governance or transparency in this case study, especially since pharmaceuticals is a highly regulated field. As Generative AI use and governance expand, transparency will become essential, not optional.

3. New Transparency Ratings for Microsoft’s Phi-3 and Snowflake’s Arctic

Our Model Transparency Ratings now contain ratings for Microsoft’s Phi-3 and Snowflake’s Arctic models. Notably, both models focus on efficiency: Phi-3-Mini is small enough to run on a smartphone; Arctic is larger but trained using less compute than models of comparable quality. To enable efficiency, the developers of both models discuss creating a “training curriculum” for the model - i.e., a curated, high-quality training dataset. This approach contrasts with some other models that focus on using the largest dataset possible.

In terms of transparency, both models received High Overall and Model Transparency scores. Both providers excel at documenting the models’ architectures and training processes. Interestingly, despite emphasizing computational efficiency, Arctic's developers did not publish the computational resources used (e.g., FLOPs, hardware type, or total training time). While Arctic will likely be exempt from the EU AI Act, publishing this information has become the standard even among less transparent models.

For Data Transparency, Phi-3 got a Medium Rating, and Arctic got a High Rating. Notably, Phi-3 uses a new synthetic dataset generated via an LLM, but does not state which model or prompts were used. While the model is released under a permissive MIT license, without understanding the training data, it is hard to fully understand a model’s capabilities and safety. On the other hand, Arctic, released under the Apache 2.0 license, shines in this area by listing all the datasets used and explaining how they were filtered prior to use. However, this information is not mentioned at all on the model’s Hugging Face page; ideally, a summary would be included to give developers a quick reference without digging deeper into the documentation. One of the goals for our AI Model Ratings was to summarize this information in one location, but we would like to see all providers publish this data in a consistent manner.

Key Takeaway: Phi-3 and Arctic are relatively transparent compared to many other open-source options; however, both contain gaps and fail to document details in a consistent format.

4. Connecticut Moves Closer to Comprehensive AI Law

On April 24, 2024, the Connecticut State Senate passed SB 2, which takes a similar approach to regulating certain AI systems as the EU AI Act. Specifically, SB 2 takes a risk-based approach that requires deployers and developers of high-risk systems to assess and manage risks stemming from “algorithmic discrimination.” Under the proposed law, algorithmic discrimination covers a range of risks that arise from unfavorable or negative differential treatment of particular groups of individuals based on certain characteristics (e.g., age, race, or religion).

The bill imposes a wide range of obligations on high-risk AI deployers. First, deployers of high-risk systems must adopt an AI risk management policy and program, which must consider, among other things, the latest iteration of the NIST AI Risk Management Framework. Second, they must conduct an initial impact assessment of high-risk systems and complete subsequent impact assessments for any “intentional and substantial modifications” made to the systems. Finally, deployers using high-risk systems as a controlling factor in, or to make, a consequential decision (e.g., creditworthiness, employment opportunities, or housing) must disclose information to the individuals subject to the decision.

While obligations are also imposed on developers of high-risk systems, they are subject to a narrower set of requirements. Developers of high-risk systems must provide deployers with documentation that discloses certain information about the high-risk system, such as the intended use(s), reasonably foreseeable risks, measures to mitigate those risks, and the types of data used to train the system. Additionally, developers must publicly disclose the types of high-risk systems they develop, substantially modify, and make available to deployers, as well as how they manage known or foreseeable algorithmic discrimination risks from their high-risk systems.

If passed, the law would be exclusively enforced by the Connecticut Attorney General.

Our Takeaways: SB 2 is a major step forward for state-level regulation in the U.S. While we noted last year that state legislatures addressed AI-related legislation, no state passed comprehensive AI regulations. The proposed Connecticut law would be the first of its kind in the U.S. and would likely serve as a baseline for other states. However, final adoption of the bill is not a foregone conclusion, as Governor Ned Lamont has voiced concerns over the legislation’s potential impact on innovation. It is also worth noting that the California state legislature put forth legislation similar to SB 2, but it is still being discussed at the committee level.

5. NIST Releases Generative AI Risk Profile and GenAI Testing Tools

The National Institute of Standards and Technology (NIST) released several publications this week, meeting its 180-day requirements from the Biden AI Executive Order. The publications include a Risk Profile for Generative AI that outlines what steps organizations can take to implement the NIST AI Risk Management Framework for Generative AI, guidelines for detecting synthetic content, and security recommendations for general purpose AI systems. The documents were released in draft form and are open for comment until June 2, 2024. In addition to the guidance documents, NIST has also announced a GenAI detection competition, where companies can submit both generative AI models and synthetic content detection models to compete against one another in order to study best practices for detecting generated content. More details can be found at: https://ai-challenges.nist.gov/genai

Key Takeaways: NIST continues to be the world’s leader on AI safety research and guidance, and its outputs are expected to increase given recent funding and new leadership.

*********

As always, we welcome your feedback on content and how to improve this newsletter!

AI Responsibly,

- Trustible team