How will the US elections shape AI policy?

Plus: what does AI monitoring mean, what are risks related to coding copilots, and LATAM AI developments.

Hi there! With Republicans winning the White House, Senate, and House of Representatives in the most recent US elections, questions are emerging about what this means for AI policy in the US. Our main story today highlights 7 areas we think you should be paying attention to.

In today’s edition:

How will the US elections shape AI policy?

What is AI monitoring?

Is AI-generated code secure?

AI developments in Latin America

1. How will the US elections shape AI policy?

Donald Trump’s election as the 47th US President, along with a new Republican majority in the US Senate and the House of Representatives, raises some important questions about the future of AI regulation. AI-related policy remains uncertain, given several contradictory positions taken by President-elect Trump and his closest advisors. Here are a few of our thoughts on what may happen:

President Biden’s AI Executive Order (EO): The official GOP platform calls for the full repeal of the AI EO, primarily because of objections to its use of the Defense Production Act to compel AI companies to provide information about testing practices. We expect that formally repealing the EO will be largely window dressing, as much of the EO has already been executed and embedded into various agency guidelines. Moreover, a replacement EO may not be much different from the current version, given that the first Trump administration issued a Trustworthy AI EO that called for some similar workstreams.

AI Safety Institute: Unfortunately, one element of President Biden’s AI EO that may not be protected is the AI Safety Institute. The Institute was not created by legislation, which means repealing the EO would effectively end the Institute’s work. We think there is still a chance to codify the Institute during the lame-duck session, as it has bipartisan support in Congress.

Agency Actions: The Federal Trade Commission (FTC) has been one of the more active US federal agencies tackling AI issues in the context of consumer protection and antitrust investigations. While the fate of FTC Chair Lina Khan is unclear (Vice President-elect JD Vance has notably championed Chair Khan), it’s unlikely that a conservative-led FTC will be as willing to take on AI regulation without explicit Congressional sanction to do so. Separately, President-elect Trump’s EPA pick mentioned US leadership in AI in his first remarks about the position. This likely reflects the incoming administration’s priority of building a robust energy sector to support accelerated AI development and use.

Tariffs on AI Materials: One of President-elect Trump’s key campaign promises was to create tariff barriers on many imported goods. While many US AI hardware systems are designed in the US, they are often manufactured overseas and then imported back to the US. It’s possible that AI components will receive some tariff exemptions, but without them the cost of building key AI-related infrastructure could soar even higher, further entrenching Big Tech, which can afford the higher costs.

National Security: Competition with China continues to be a priority for President-elect Trump, and his early foreign policy picks underscore that. This includes competition in AI and ensuring that AI is used to its full potential for national security purposes. We expect to see policies that encourage AI adoption in the national security sector. Risk management frameworks like the one recently published by the White House can actually help with this because they give both buyers and sellers a common framework to work from.

Federal vs State Legislation: Sweeping federal AI legislation akin to the EU AI Act is unlikely, which will lead more left-leaning states to try to regulate AI themselves (e.g., Colorado and California). The recent selection of Sen. John Thune (R-SD) as incoming Senate Majority Leader, along with a potential GOP trifecta, could lead to some bipartisan movement on federal AI-related legislation.

The ‘Musk’ Factor: Elon Musk seems to have quickly become one of Trump’s leading advisors on many issues. He spent election night with Trump and has participated in major foreign policy calls with the President-elect. Musk has held varying positions on AI and himself owns a large AI company (xAI). Musk previously called for AI regulation, and actually endorsed the California Frontier Model bill (SB 1047) before it was vetoed by Democratic Governor Gavin Newsom. We anticipate Musk having a large influence on how AI regulation takes shape in the new administration, which could mean less emphasis on many of the ‘guardrails’ championed by other Big AI players like Anthropic and OpenAI.

Our take: Strap in.

2. What is AI monitoring?

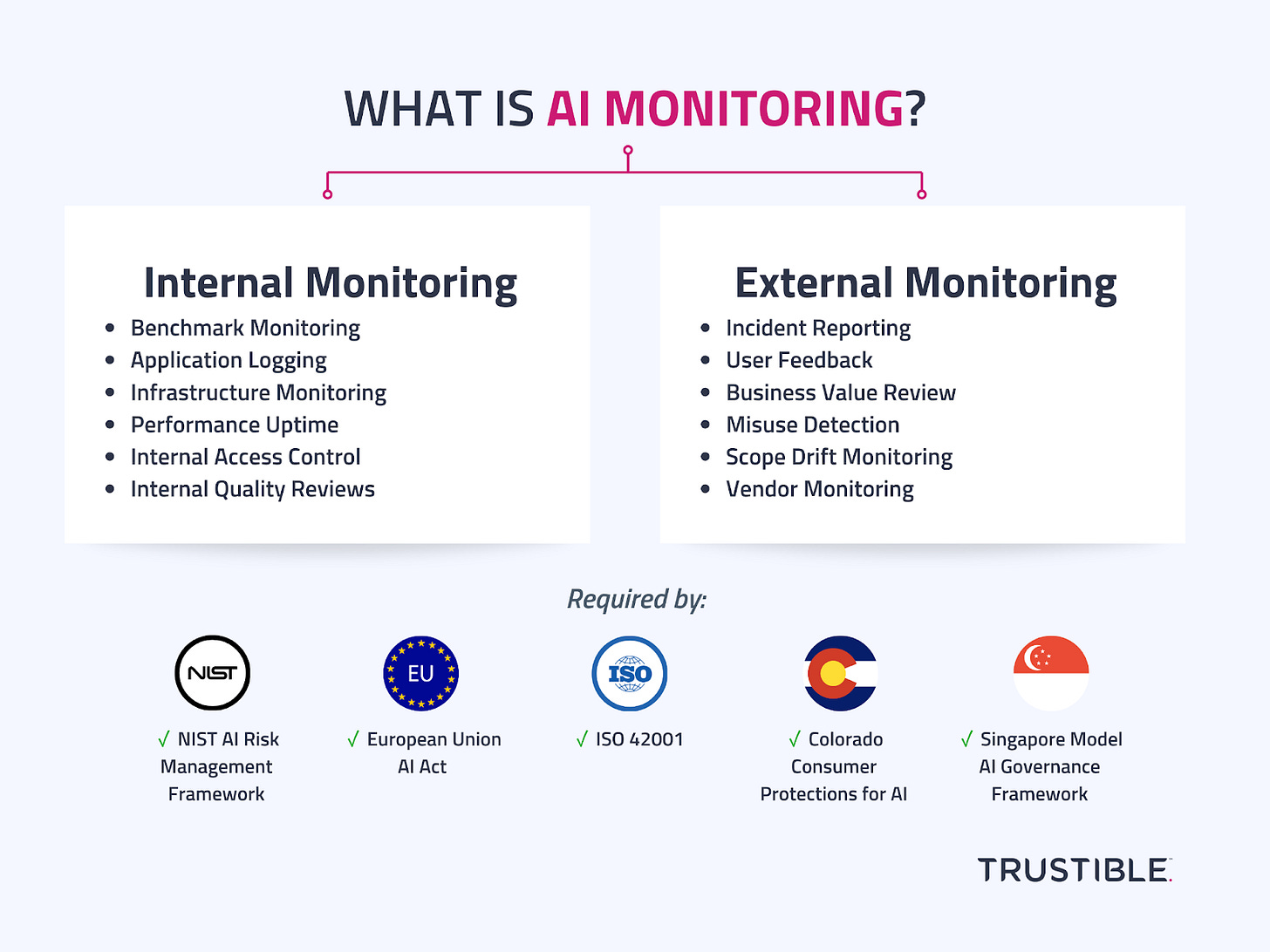

A common compliance requirement across many AI regulations and voluntary AI standards is the need to monitor AI systems. However, the term ‘monitoring’ is overloaded. Many technical teams misinterpret the regulators’ intention and believe that the requirement is simply to track the logs and input/output metrics of an AI model. While this type of monitoring is undoubtedly beneficial and necessary, other forms of monitoring are also required.

In our latest blog post, we discuss the key internal and external monitoring forms that organizations must have in place for compliance with emerging AI governance frameworks.

Internal monitoring focuses on the technical health and performance of the AI system, while external monitoring involves gathering and responding to real-world feedback and usage data. Key AI regulations and frameworks, such as the NIST AI RMF, ISO 42001, the EU AI Act, Colorado’s AI bill, and Singapore’s Model AI Governance Framework, emphasize the importance of both internal and external monitoring. Responsible AI deployment and regulatory compliance require implementing both forms of monitoring.
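To make the distinction concrete, here is a minimal Python sketch of a log that tracks both forms of monitoring and reports which one is missing. It is purely illustrative: the class name `MonitoringLog`, its methods, and the example metrics and feedback sources are our own assumptions and are not drawn from any of the frameworks named above.

```python
from dataclasses import dataclass, field


@dataclass
class MonitoringLog:
    """Hypothetical container for the two forms of monitoring."""

    # Internal: technical health and performance of the AI system.
    internal: list = field(default_factory=list)
    # External: real-world feedback and usage data.
    external: list = field(default_factory=list)

    def record_internal(self, metric, value):
        # Example metrics (assumed): error rate, latency, drift score.
        self.internal.append({"metric": metric, "value": value})

    def record_external(self, source, note):
        # Example sources (assumed): user complaints, incident reports.
        self.external.append({"source": source, "note": note})

    def missing_forms(self):
        """Return the forms of monitoring that have no records yet."""
        missing = []
        if not self.internal:
            missing.append("internal")
        if not self.external:
            missing.append("external")
        return missing


if __name__ == "__main__":
    log = MonitoringLog()
    log.record_internal("error_rate", 0.02)
    # Only model metrics have been logged so far, so the
    # external form of monitoring is still missing.
    print(log.missing_forms())
```

A team that only tracks logs and input/output metrics would look like this example: its internal records are populated, while the external side stays empty.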

Read our latest blog post here.

3. Is AI-generated code secure?

AI code generation tools (including coding copilots) have gained widespread adoption, with Google stating that over 25% of its new code is generated by AI. While these tools increase efficiency, they heighten organizations’ cybersecurity risk, because AI-generated code may contain vulnerabilities and be subject to decreased scrutiny due to automation bias (i.e., a propensity to believe the outputs of an AI system). A recent study from CSET found that leading LLMs often generate buggy, exploitable code, but it focused narrowly on test cases likely to produce poor outputs. The authors highlighted difficulties in benchmarking cybersecurity risks due to the broad, evolving nature of vulnerabilities and challenges in detecting insecure code.

While LLMs can generate insecure code, the impact of this risk needs to be considered within the context of the organization’s existing cybersecurity practices. LLMs generate insecure code partly because they train on open-source repositories and coding forums that contain poor practices. Since human developers often reference the same sources, organizations already employ code reviews, scanning tools, and package restrictions. These practices should extend to AI-generated code.
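As an illustration of the kind of flaw that code reviews and scanning tools are meant to catch, here is a short Python sketch that contrasts a query built by string concatenation with a parameterized one. It is our own example using Python’s standard `sqlite3` module, not a case taken from the CSET study, and the function names and demo table are hypothetical.

```python
import sqlite3


def find_user_insecure(conn, username):
    # Vulnerable: user input is concatenated directly into the SQL string,
    # so crafted input can change the meaning of the query.
    query = "SELECT id, name FROM users WHERE name = '" + username + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Safer: a parameterized query passes the input as data only.
    query = "SELECT id, name FROM users WHERE name = ?"
    return conn.execute(query, (username,)).fetchall()


def make_demo_db():
    # In-memory database with two demo rows (hypothetical data).
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)]
    )
    return conn


if __name__ == "__main__":
    conn = make_demo_db()
    payload = "nobody' OR '1'='1"
    # The concatenated query matches every row in the table.
    print(find_user_insecure(conn, payload))
    # The parameterized query matches no rows for the same input.
    print(find_user_safe(conn, payload))
```

Both functions look plausible at a glance, which is why automation bias matters: the insecure version works for ordinary input and only fails under a crafted one.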

Moreover, AI code generation presents unique challenges:

LLMs’ code generation abilities have led to multiple low-code applications that allow new developers and small businesses to quickly build and ship projects. In this context, extensive cybersecurity practices may not be in place, and users will be particularly vulnerable.

AI systems may be subject to additional vulnerabilities beyond bad training data that are harder to detect and prevent. For example, a copilot that references external documentation may ingest malicious instructions.

Insecure AI-generated code from this generation of models will become training data for the following generation of models. It is unclear if vulnerabilities will be exacerbated over time.

Key Takeaways: AI-generated code can contain many vulnerabilities, but their prevalence is difficult to quantify under realistic use conditions. Organizations with extensive cybersecurity risk controls may not see significant impacts; in contrast, users of low-code AI-powered software may be particularly vulnerable to new cyber threats. Currently, the burden of checking code for vulnerabilities falls entirely on the end user; new policy and cybersecurity guidance will be required to cover the gaps.

4. AI developments in Latin America

Over the past year, the US and EU dominated the conversation about responsible AI development and oversight. The EU AI Act’s entry into force, as well as efforts by the US federal and state governments, eclipsed AI-related work being done in other parts of the globe. Countries in the Global South, specifically in Latin America, are working both regionally and individually to advance AI innovation and oversight.

Broadly speaking, Latin American countries are cooperating on a regional approach to AI governance. In August 2024, 17 Latin American countries adopted the Cartagena Declaration on AI governance. The Declaration reaffirmed a commitment to regional cooperation on AI development, promised to share AI best practices and expertise, and pledged to develop AI in accordance with existing AI ethical frameworks. Additionally, representatives from Brazil, Chile, and Uruguay recently met with experts in the EU on AI technology and policies. The meetings are intended to improve cooperation on digital technologies between the EU and Latin America.

Beyond regional cooperation, at least three Latin American countries have proposed legislation to regulate AI use and development. Brazil (Bill No. 1465/2024), Chile (Nr. 16821-19), and Peru (Draft Law 07033/2023) have proposed bills modeled after the EU AI Act, which seek to balance AI regulatory oversight with encouraging innovation. The proposed laws follow the same risk-based approach as the EU AI Act but are generally less prescriptive.

Our Take: Cooperation among culturally similar nations has the potential to help address gaps in AI training data and improve performance for non-Western cultures. Additionally, we may see regulatory approaches emerge from places like Latin America that can provide alternatives to the more prescriptive EU AI Act.

*********

As always, we welcome your feedback on content and how to improve this newsletter!

AI Responsibly,

- Trustible team