The Trust Gap Will Be The Biggest Barrier Towards AI Adoption

Plus, EU AI Act adoption, smaller AI models, and privacy teams owning AI governance

Hello! Let’s get right to it. Here’s what we’ll cover today (7 minute read):

The Trust Gap Will Be The Biggest Barrier Towards AI Adoption

EU AI Act Crosses The Finish Line

The Quest to Make AI Models Smaller

AI Software Meets Hardware

Privacy Professionals Are Being Asked To Own AI Governance

Want to learn more about Trustible? Watch this 2-minute video and contact us if you want to get a demo of our Responsible AI Governance Platform.

–

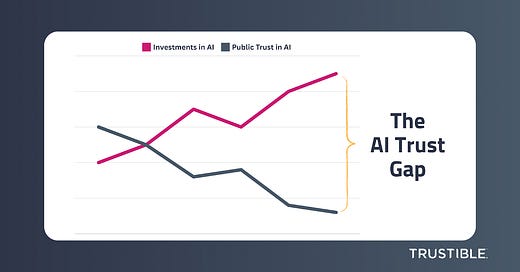

1. The Trust Gap Will Be The Biggest Barrier Towards AI Adoption

It’s no secret that investment in AI is increasing. Over a quarter of all venture capital investments in 2023 went to AI companies, compared to only 11% in 2018. In February 2024 alone, over $4.7Bn was invested in this category, more than twice as much as in the same month a year earlier. Nvidia, widely seen as the company leading the AI revolution, has seen a 226% increase in its stock price over a two-year period. Meanwhile, TD Cowen, an investment bank, estimates that generative AI software spending will boom from $1 billion in 2022 to $81 billion in 2027, representing a 190% five-year compound annual growth rate.
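For readers who want to sanity-check growth figures like these, here is a minimal sketch of the standard compound annual growth rate formula. The function names are ours, and the inputs are the rounded numbers quoted above, not TD Cowen's underlying data. Taken at face value, $1 billion growing to $81 billion over five years implies a rate closer to 141% than 190%, so the cited rate presumably rests on unrounded figures (for example, a 2022 base well below $1 billion) that we can't see from the summary.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


def implied_start(end_value: float, rate: float, years: int) -> float:
    """Starting value that grows to end_value at the given annual rate."""
    return end_value / (1 + rate) ** years


# Rounded figures quoted in the newsletter (billions of USD); assumptions, not source data.
implied_rate = cagr(start_value=1.0, end_value=81.0, years=5)
implied_base = implied_start(end_value=81.0, rate=1.90, years=5)

print(f"Implied CAGR from $1B to $81B over 5 years: {implied_rate:.0%}")
print(f"2022 base implied by a 190% CAGR: ${implied_base:.2f}B")
```

Either way, the takeaway is the same: spending is forecast to more than double every year.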

However, despite the positive trends for AI investments, public trust in AI is in freefall. According to Edelman, global trust in AI companies has declined over the past five years from 61% to 53%. In the US, there has been a 15-point drop from 50% to 35%, a mistrust shared equally by Democrats, Republicans, and Independents. Interestingly, people in emerging economies (such as India, China, Saudi Arabia, and Nigeria) are more likely to embrace AI technologies than people in developed markets (like the US, UK, France, or Australia).

Why This Matters: This trust gap will be the main barrier towards AI adoption. Respondents to the Edelman survey who are less than enthusiastic about the growing use of AI said that they would feel better about it if they understood the technology better, were sure that businesses would thoroughly test AI, and knew that those adversely affected would be considered.

In other words, if organizations implemented and scaled AI governance programs, the public would trust and embrace the technology more.

2. EU AI Act Crosses The Finish Line

On March 13, 2024, the European Parliament adopted the EU AI Act by a vote of 523 to 46, with 49 abstentions. Last week's vote was the final legislative hurdle for the world’s first comprehensive AI regulatory regime and came almost a month earlier than originally expected. The text will undergo a final legal review before the end of the legislative session and must still be formally endorsed by the Council of the European Union, a mere formality at this stage.

What to Expect Next: The EU AI Act will officially enter into force 20 days after publication in the Official Journal of the European Union, which is currently expected by May 2024. The first set of obligations under the law, which prohibits certain AI practices and systems, would take effect by the end of 2024 or early 2025. Obligations related to general-purpose AI systems and high-risk AI systems would come into effect around May 2025 and May 2027, respectively.

3. The Quest to Make AI Models Smaller

Over the past year, many foundation model creators have raced to build progressively larger models. However, when it comes to the business case for AI, bigger isn’t always better. While larger models with more parameters may exhibit enhanced performance, they cost more to train and, more importantly, cost more to run for inference. In addition to the costs, the hardware requirements of these large models rule out entire sets of use cases on mobile, embedded, or consumer hardware. In these domains, the size of the model is seen as a bug, not a feature.

The most recently announced language models, such as Claude 3 and Google Gemini, have included a ‘miniature’ version intended for use on smaller devices. Google is reportedly in discussions with Apple to license its Gemini Nano model for iPhones, and already made a similar deal with Samsung last year. Many mobile apps currently rely on APIs to interact with larger AI models hosted in the cloud, but this can come with serious reliability and speed issues. For business use cases, smaller models trained on sector-specific datasets can be more efficient and effective.

In addition to opening up new embedded use cases, model creators may want to avoid creating new models that trigger the ‘systemic’ general-purpose AI model requirements of the EU AI Act. While the newly created AI Office will have the power to designate models as ‘systemic’ based on their capabilities, the initial proposed criterion for the designation is the total amount of compute used to train the model. Smaller models will likely be seen as ‘less capable’, even if their performance on AI benchmarks is not significantly different, and thus potentially be subject to less regulatory scrutiny.

Our Take: These three reasons (cost, performance, and regulatory scrutiny) are why we expect to see a race for smaller models that still perform similarly to larger models on AI benchmarks. This race will enable model providers to fit capable generative AI models onto mobile devices.

4. AI Software Meets Hardware

Speaking of models embedded in devices… AI models are increasingly being built into hardware devices. Figure, a robotics startup valued at $2.6 billion, announced last week the integration of OpenAI models into its humanoid robot, which can pick up trash and put away dishes while having a conversation with humans. Meta’s Ray-Ban smart glasses let people ask questions based on what they see. For example, you can look at a historical building such as the US Capitol or the Eiffel Tower through your glasses and ask for facts about it. Humane’s AI Pin is a standalone, screenless device that attaches to your clothes and that users interact with through voice commands.

Why This Matters: If you own Responsible AI at your organization, it’s not just software AI vendors you need to evaluate. The above examples are mostly consumer products, but AI-enhanced hardware and devices will permeate your organization. From advanced robotics in your facilities to AI-enabled check-out counters and IoT devices, expect that AI will simply power many of our daily experiences in both the physical and digital worlds.

5. Privacy Professionals Are Being Asked To Own AI Governance

In two weeks, thousands of data privacy professionals from around the world will descend on Washington DC for the annual International Association of Privacy Professionals (IAPP) Global Privacy Summit. The IAPP has been leading efforts to professionalize AI governance at organizations through its AI Governance Professional Certification program, with the first certification exams scheduled for early April.

Many privacy professionals who have helped organizations navigate the complexities of data privacy regulations such as GDPR and CCPA are now being tasked with preparing their organizations for compliance with the EU AI Act and similar proposed regulations. To understand what challenges privacy professionals face when retraining to own AI governance, Trustible partnered with researchers at Harvard University to survey privacy professionals who have successfully made the transition. Here are a few key insights from the white paper, which you can download here:

AI can be value-generating for businesses, so timelines may be more aggressive and more stakeholders may be involved

Technical upskilling may be particularly difficult given the speed of AI development

Existing AI development practices are often very immature, and shifting the culture of AI development teams is difficult

AI risks and harms are highly contextual, and the use case determines many of the potential risks

Trustible is sponsoring the IAPP Privacy Summit in DC. If you’ll be there, please swing by our booth (#434) and say hello!

*********

As always, we welcome your feedback on content and how to improve this newsletter!

AI Responsibly,

- Trustible team