What does AI Regulation look like under a Trump/Vance Administration?

Plus, measuring the ROI of AI use cases, the promises and challenges of RAG, the EU AI Act coming into effect, and the Supreme Court's impact on AI

Hi there! We hope that those of you in the Eastern and Central United States are staying cool during this heatwave. In today’s edition:

Supreme Court decisions raise questions over the future of Federal AI policy

How Can Organizations Measure the ROI of AI Investments?

How to get started with EU AI Act Compliance

The Promises and Challenges of RAG

What does AI Regulation look like under a Trump/Vance Administration?

In case you missed it: Trustible was recently selected for Google for Startups funding! Read more about the announcement here.

1. Supreme Court decisions raise questions over the future of Federal AI policy

The U.S. Supreme Court concluded its 2023-2024 term with a number of high-profile decisions that will affect federal agencies’ rulemaking and enforcement authorities. The most notable case is Loper Bright Enterprises et al. v. Raimondo (Loper), which overturned a 40-year-old doctrine known as Chevron deference. Before Loper, courts generally deferred to a federal agency’s reasonable interpretation of ambiguous language in a federal statute. Loper reverses this: courts must now independently determine whether an agency acted within its statutory authority, rather than defer to the agency’s interpretation.

The Supreme Court issued two additional decisions that may have received less attention but could have far-reaching impacts nonetheless. In SEC v. Jarkesy (Jarkesy), the Court curtailed the SEC’s enforcement authority, holding that when the agency seeks civil penalties for alleged securities fraud, the accused has a constitutional right to a jury trial, which the SEC’s internal administrative enforcement process does not provide. Jarkesy may set the stage for challenges to similar internal enforcement mechanisms at other agencies, including the Federal Trade Commission.

In Corner Post, Inc. v. Board of Governors of the Federal Reserve System (Corner Post), the Court extended the time frame in which a lawsuit can be filed under the Administrative Procedure Act. Previously, the six-year window to file suit was generally counted from when a rule was finalized. Under Corner Post, the six years start when the party bringing the suit was harmed by the rule, so a business formed long after a regulation took effect can still challenge it. The Corner Post decision could inject uncertainty over the finality of regulations given the new window for legal challenges.

Our Take: Taken together, these cases represent a significant restriction on Executive Branch authority. Within the context of a federal AI policy, a federal law would need to be clear about the authorities of the implementing federal agencies or risk significant legal challenges to subsequent regulations. Even in the absence of a federal law, existing federal agency authorities that may be leveraged to regulate AI could be significantly curtailed. This level of uncertainty at the federal level is likely to nudge more states towards their own AI rules.

2. How can organizations measure the ROI of AI investments?

The high cost of AI training, staff, infrastructure, and deployment is drawing increasing scrutiny from investors, boards, and executives.

In a recent report, the investment giant Goldman Sachs questioned whether Generative AI’s promise of transformation justifies the trillions of dollars in AI capital expenditures expected in “the coming years.” In their view, the revenue generated by AI initiatives (estimated at $5-10Bn in 2024) still falls far short of the $500Bn spent on AI infrastructure.

From our conversations with organizations, we continue to see sustained enthusiasm for AI adoption. However, we expect organizations to face increasing pressure from their boards to justify the value of their GenAI spending.

As such, we’ve come up with a simple framework to calculate the Return on Investment (ROI) of AI use cases.

Calculating Benefits

Total gains from market expansion, including new market entry, upselling, and new revenue streams

Total gains from performance improvement, including improved analytical capabilities and customer retention

Total cost savings, including from resource optimization and efficiency improvements

Calculating Additional Costs

Total initial investment costs, including software licensing, hardware, and cloud infrastructure fees

Total operational expenses, including data acquisition and maintenance costs

Total personnel costs, including hiring, staffing, training, and change management programs
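To make the framework concrete, here is a minimal Python sketch. It assumes the standard ROI definition, (total benefits - total costs) / total costs, with every figure measured over the same time period. The class and field names are hypothetical and simply mirror the benefit and cost categories listed above; the dollar figures are made-up illustrations, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class AIUseCaseFinancials:
    """Figures for one AI use case over a single period (hypothetical field names)."""

    # Benefits
    market_expansion_gains: float  # new market entry, upselling, new revenue streams
    performance_gains: float       # improved analytics, customer retention
    cost_savings: float            # resource optimization, efficiency improvements
    # Costs
    initial_investment: float      # software licensing, hardware, cloud fees
    operational_expenses: float    # data acquisition, maintenance
    personnel_costs: float         # hiring, staffing, training, change management

    def total_benefits(self) -> float:
        return self.market_expansion_gains + self.performance_gains + self.cost_savings

    def total_costs(self) -> float:
        return self.initial_investment + self.operational_expenses + self.personnel_costs

    def roi(self) -> float:
        """Standard ROI: net gain divided by total cost."""
        costs = self.total_costs()
        if costs <= 0:
            raise ValueError("total costs must be positive to compute ROI")
        return (self.total_benefits() - costs) / costs


# Illustrative numbers only
example = AIUseCaseFinancials(
    market_expansion_gains=200_000.0,
    performance_gains=100_000.0,
    cost_savings=150_000.0,
    initial_investment=120_000.0,
    operational_expenses=80_000.0,
    personnel_costs=100_000.0,
)
print(f"ROI: {example.roi():.0%}")  # prints "ROI: 50%"
```

With these hypothetical inputs, $450K in benefits against $300K in costs yields an ROI of 50%. Keeping each category as its own field makes it easy to see which assumptions drive the result.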

We’re building features on our platform to comprehensively quantify the ROI of AI use cases, helping you make better resource allocation decisions for your AI initiatives. If you’re interested in learning more, reach out to us at contact@trustible.ai.

3. How to get started with EU AI Act Compliance

On July 12th, Regulation (EU) 2024/1689 was published in the Official Journal of the European Union, making the EU AI Act official law. While many portions of the Act still need clarification from the newly created EU AI Office, there are common steps organizations can take right away to prepare for compliance:

Create AI Governance policies and organizational structures

Who is in charge of AI Act compliance? Your privacy officers? Cybersecurity and IT teams? Legal or compliance teams? All of the above? Every organization that deploys or provides high-risk or general-purpose AI systems will need clear policies in place to identify relevant systems, and then to implement the AI Act’s risk management, quality assurance, and post-market monitoring requirements (among others). Trustible provides both policy templates and guidance on how to get started with these policies today.

Start building an AI Inventory

Which of your AI systems are high-risk? Which vendors and models are you using, and what will their obligations be? Building an inventory takes time, and putting a process in place now for collecting and maintaining this information will save time in the long run as use cases multiply. One key question your inventory should answer is which models your organization uses, and whether those models comply with the AI Act’s general-purpose AI (GPAI) requirements. Trustible provides an evaluation of which models are already compliant, and can help organizations ensure any new models they adopt are likewise compliant.
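As an illustration only, an inventory can start as a simple structured record per AI system. The sketch below is hypothetical: the field names, the simplified risk tiers (loosely modeled on the AI Act’s risk-based approach, not a legal classification), and the `needs_review` rule are assumptions meant to show the kind of questions an inventory should answer, not a compliance determination.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class RiskTier(Enum):
    # Simplified, hypothetical tiers; real classification needs legal review
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"
    UNKNOWN = "unknown"  # not yet assessed


@dataclass
class InventoryEntry:
    system_name: str
    owner: str                            # accountable team or person
    vendor: Optional[str]                 # None for in-house systems
    model: str                            # underlying model identifier
    uses_gpai_model: bool = False         # built on a general-purpose AI model?
    risk_tier: RiskTier = RiskTier.UNKNOWN
    gpai_compliant: Optional[bool] = None  # None = not yet verified


def needs_review(inventory: List[InventoryEntry]) -> List[str]:
    """Return names of systems that are high-risk, unassessed, or built on an
    unverified GPAI model (a hypothetical triage rule)."""
    flagged = []
    for entry in inventory:
        risky = entry.risk_tier in (RiskTier.PROHIBITED, RiskTier.HIGH, RiskTier.UNKNOWN)
        unverified_gpai = entry.uses_gpai_model and entry.gpai_compliant is not True
        if risky or unverified_gpai:
            flagged.append(entry.system_name)
    return flagged


# Hypothetical entries for illustration
inventory = [
    InventoryEntry("resume-screener", "HR", "VendorA", "vendor-model-1",
                   risk_tier=RiskTier.HIGH),
    InventoryEntry("support-chatbot", "Customer Success", "VendorB", "gpai-model-1",
                   uses_gpai_model=True, risk_tier=RiskTier.LIMITED),
    InventoryEntry("spam-filter", "IT", None, "in-house-classifier",
                   risk_tier=RiskTier.MINIMAL),
]
print(needs_review(inventory))  # ['resume-screener', 'support-chatbot']
```

Defaulting `risk_tier` to `UNKNOWN` and `gpai_compliant` to `None` means newly added systems surface for review until someone explicitly assesses them.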

Identify and implement AI literacy training

Article 4 of the AI Act requires AI providers and deployers to take measures to ensure a sufficient level of AI literacy among their staff and other relevant personnel. While the language is vague, Recital 20 further clarifies that all actors in the AI value chain need to be equipped with the training and education necessary to oversee or responsibly use AI systems. Stay tuned for how Trustible can help organizations implement the necessary training and education!

Key Takeaway: The AI Act regulates the people and processes used to develop and deploy AI, more than the technology itself. As a result, organizations can start building up the policies, processes, and personnel who will be responsible for compliance right away. Learn more about how the Trustible platform can help you comply with the EU AI Act.

4. The Promises and Challenges of RAG

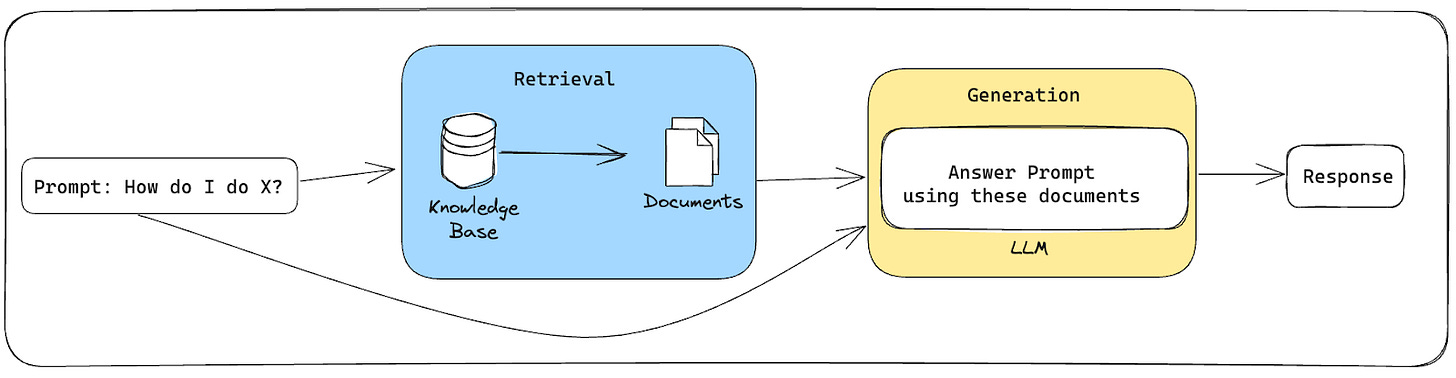

Retrieval Augmented Generation (RAG) is often touted as a solution to three main challenges of using LLMs for enterprise use cases:

Incorporating Organizational Knowledge

Reducing Hallucinations

Keeping information up-to-date

In a RAG system, the user’s prompt is used to find relevant documents (typically via a vector search) in a knowledge base (KB). These documents are then passed to the LLM alongside the original prompt, with instructions to answer the prompt using only those documents. This framework can help alleviate all three challenges, but it does not eliminate them entirely, and it introduces new risks. These risks fall into the following categories:

Knowledge Base Contents: For RAG to work, the KB needs to contain up-to-date and accurate information. Documents that are no longer relevant need to be removed, sensitive or private information needs to be sanitized before documents are added to the KB, and the KB needs to be secured against indirect prompt injections (malicious instructions planted inside documents that the LLM may later follow).

Retrieval Errors: To generate an accurate answer, the right documents need to be collected during the Retrieval step. This is not guaranteed, especially if the user prompt is vague or many similar documents exist. Separating “Retrieval” errors from “Generation” errors requires a sophisticated development and evaluation process.

Generation Errors: Even given the right retrieved content, LLMs can hallucinate, so additional mitigations should still be incorporated.
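The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a toy sketch under heavy assumptions: a bag-of-words cosine similarity stands in for a real embedding model and vector index, the LLM call itself is omitted (the code only builds the grounded prompt), and `retrieval_recall` is one simple, hypothetical way to score the retrieval step on its own, a first step toward separating retrieval errors from generation errors.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. A real system would use a
    learned embedding model; this keeps the example self-contained."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, kb: Dict[str, str], k: int = 2) -> List[Tuple[str, float]]:
    """Retrieval step: rank KB documents by similarity to the query, keep the top k."""
    q = embed(query)
    scored = [(doc_id, cosine(q, embed(text))) for doc_id, text in kb.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_prompt(query: str, kb: Dict[str, str], hits: List[Tuple[str, float]]) -> str:
    """Generation input: retrieved documents plus an instruction to stay grounded."""
    context = "\n\n".join(f"[{doc_id}]\n{kb[doc_id]}" for doc_id, _ in hits)
    return (
        "Answer the question using ONLY the documents below. "
        "If they do not contain the answer, say you don't know.\n\n"
        f"{context}\n\nQuestion: {query}"
    )


def retrieval_recall(hits: List[Tuple[str, float]], relevant_ids: List[str]) -> float:
    """Share of known-relevant documents that were retrieved; scores the
    retrieval step independently of what the LLM later generates."""
    if not relevant_ids:
        raise ValueError("need at least one relevant document id")
    retrieved = {doc_id for doc_id, _ in hits}
    return len(retrieved & set(relevant_ids)) / len(relevant_ids)


# Hypothetical knowledge base and query
kb = {
    "pto-policy": "Employees accrue 20 days of paid time off per year.",
    "expense-policy": "Submit expense reports within 30 days of purchase.",
    "security-policy": "Rotate passwords every 90 days and enable MFA.",
}
query = "How many days of paid time off do employees get?"
hits = retrieve(query, kb, k=1)
prompt = build_prompt(query, kb, hits)
print(hits[0][0])                              # pto-policy
print(retrieval_recall(hits, ["pto-policy"]))  # 1.0
```

Scoring retrieval against a small labeled set of queries like this lets a team tell whether a wrong answer came from fetching the wrong documents or from the LLM misusing the right ones.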

For a deeper discussion of these challenges, review this article. Despite these challenges, a clear alternative does not exist in many cases. Fine-tuning (i.e., additional training of an off-the-shelf LLM on custom data) resolves some of the initial challenges, but can be a larger engineering lift and requires regular retraining on the newest data. While some state-of-the-art (SotA) LLMs can handle context windows of up to one million tokens, passing the whole KB into every prompt to eliminate the retrieval step is expensive and slow. Overall, RAG is a strong solution for many AI challenges, as long as the risks outlined above are addressed.

Key Takeaway: RAG may become a standard part of AI systems going forward, but it does not fully eliminate the risk of hallucination, and it introduces new risks around security, privacy, and evaluation.

5. What does AI Regulation look like under a Trump/Vance Administration?

With the prospect of a second Trump administration becoming increasingly likely, it’s worth looking at what AI regulation could look like in the US should the Trump/Vance ticket win. A few recent events provide some insight into what could happen, and who will shape that policy:

GOP Party Platform

The recently adopted GOP party platform calls for repealing the Biden administration's executive order on AI and ensuring AI promotes ‘free speech’ and ‘human flourishing’ (whatever that means). However, the main GOP objection to the executive order has been its use of the Defense Production Act to require reporting on frontier model safety testing. Many other parts of the executive order actually mirror Trump’s own executive order on AI from 2020, and most of Biden’s order will already have been carried out by the time Trump would take office. Many of Trump’s congressional allies have also sought to codify parts of the executive order into law, including the creation of the AI Safety Institute. So while a formal revocation may happen for political optics, many of the policy outcomes may stay the same.

J.D. Vance & VC Backers

Trump’s selection of J.D. Vance as his running mate, and Vance’s deep connections with leading Silicon Valley investors, including Elon Musk and Peter Thiel, offer clues about what a second Trump administration’s AI priorities may be. Back in March, Vance posted on X (formerly Twitter) about AI bias, framing it as AI promoting liberal values. This highlights a recurring theme: Democrats and Republicans both talk about AI bias, but mean radically different things. Musk, who regularly shares his views on AI, was recently reported to be planning to contribute around $45 million per month to Trump-aligned super PACs. It’s hard to imagine that level of financial support not influencing administration policy.

GOP Congressional Leaders

While several GOP senators have introduced bipartisan legislation to promote AI development and fund several NIST efforts, many GOP-aligned think tanks are pushing back on any preemptive regulation, and deference to agencies recently took a big hit at the Supreme Court with the Loper decision. In addition, GOP House leaders have signaled they may not want to move aggressively on AI in the short term.

Key Takeaway: There is still a chance that federal AI legislation passes in the next session of Congress, if for no other reason than to preempt the actions of more liberal-leaning states such as California and Colorado. However, the shape and structure of this legislation could look very different from recent proposals. It may seek to define ‘AI bias’ not in terms of preventing racial discrimination in AI, but in terms of preventing the promotion of perceived ‘woke’ values.

*********

As always, we welcome your feedback on content and how to improve this newsletter!

AI Responsibly,

- Trustible team