What’s the difference between AI risk and AI harm?

Plus, insights on AI bias, AI stakeholders, and AI procurement, and AI wins big at this year’s Nobel Prizes.

Hi there! Trustible was recently selected by CIOReview as a Top 20 AI Solution Provider. Read the in-depth profile on Trustible here.

In today’s edition:

What’s the difference between AI risk and AI harm?

What to do when you find AI Bias

OMB issues AI Procurement Guidelines

Understanding AI Stakeholders with Trustible’s AI Stakeholder Taxonomy

AI Researchers win Nobel Prizes

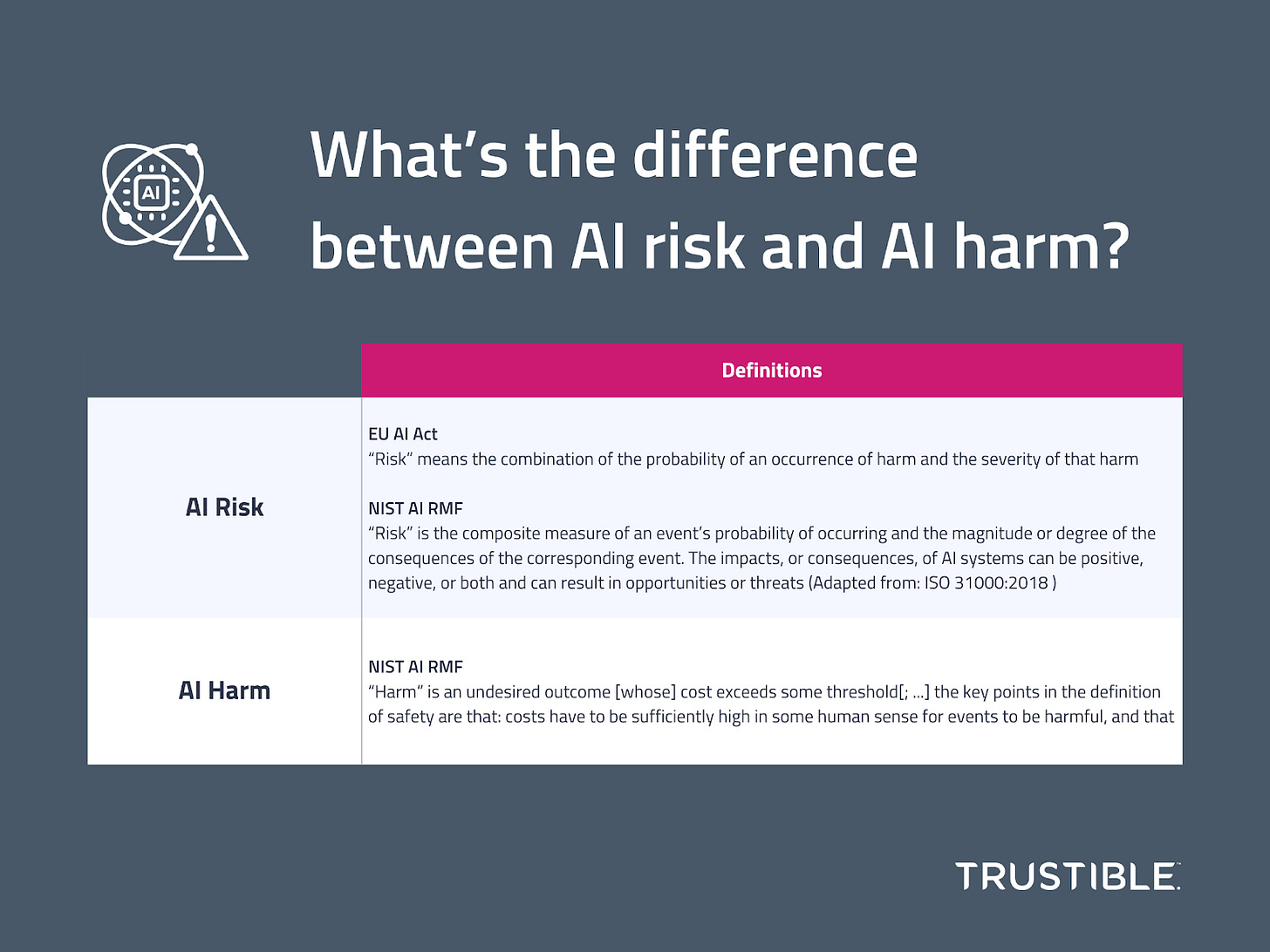

1. What’s the difference between AI risk and AI harm?

A notable challenge that organizations face in implementing AI governance structures is deciphering unclear terminology applied to AI-specific contexts. A prime example is the pair of terms “risk” and “harm,” which AI frameworks and regulations reference inconsistently. While certain AI system behaviors can be considered both risks and harms (e.g., a loss of revenue), a harm is more typically the outcome of one or more risks being realized (e.g., a robot causing physical harm may result from multiple points of failure).

Interestingly, the EU AI Act defines only risk, but its definition treats harm as a separate quantity to measure: it refers to the probability of a harm occurring and the severity of that harm. Conversely, NIST’s glossary of AI terms provides separate definitions for risk and harm, with the former measuring potential impacts (both negative and positive) while the latter considers a threshold for causing some form of injury. This discrepancy can be a challenge for organizations that want to create consistent documentation processes.

Context is also key when considering risk and harm within these AI governance frameworks. Organizations will likely conduct both a risk assessment and an impact assessment, and comparing the two helps demonstrate the difference between risk and harm. The main focus of an AI risk assessment is to understand the AI system’s primary purpose and usage. The “risk” being assessed is the likelihood of an issue (e.g., legal or performance) arising with the system. Compare this to an impact assessment, which is arguably the logical offspring of the risk assessment. Once the likelihood, or risk, of an issue occurring has been assessed, an organization needs to consider the harm that issue can cause. While an impact assessment will also measure the “risk” of a harm occurring, it is primarily focused on documenting the universe of reasonably foreseeable harms.

Our Take: Risk and harm are oftentimes used interchangeably to describe negative outcomes from an AI system. When we think about issues that can arise with AI systems, we may be more concerned with the real-world ramifications of the issue rather than parsing out differences in terminology. Yet, building robust AI governance structures requires us to understand the distinction between AI risks and harms.

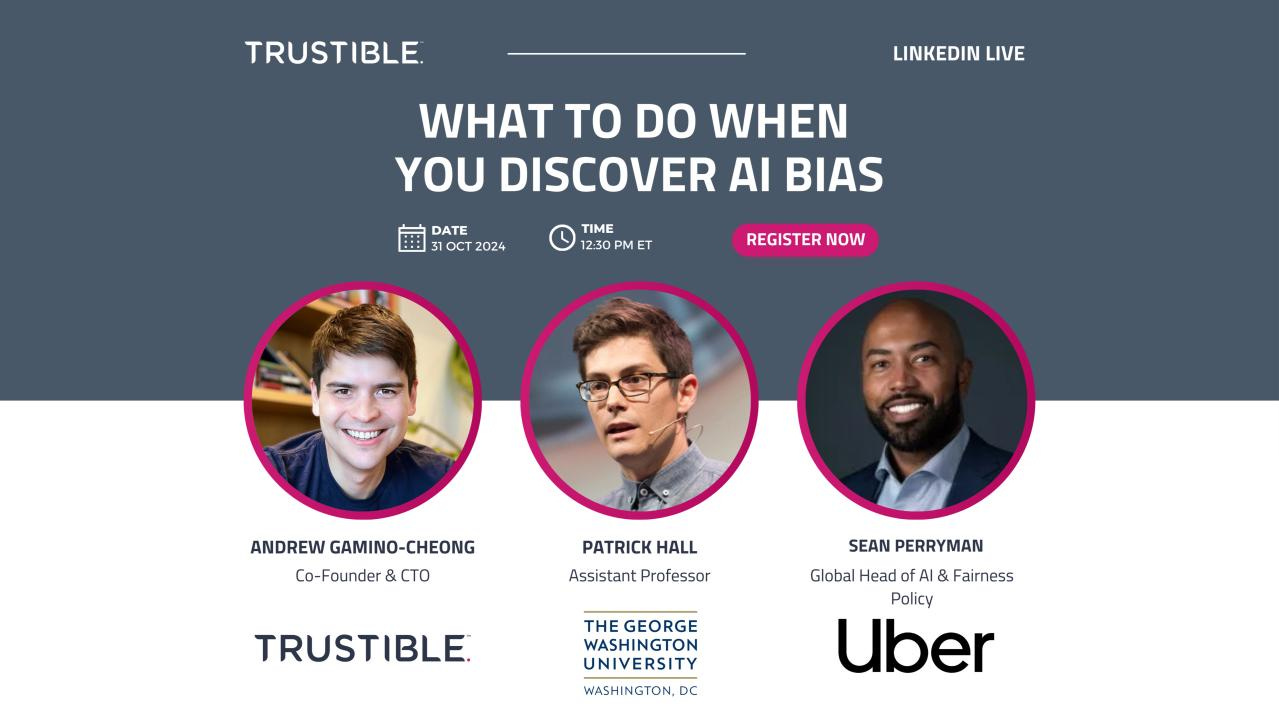

2. What to do when you find AI Bias

Everyone likes to talk about creating unbiased and fair AI, and this is reflected in many regulations. However, AI systems are built on training data collected from a very biased world, and definitions of bias differ on cultural, socio-economic, and even ideological grounds. While defining and measuring AI bias can be difficult on its own, an even more challenging question is what to do when (not if) you discover it in a system. Options include collecting more training data, changing the model structure, shutting down the project entirely, or perhaps simply disclosing the bias and releasing the system anyway.

To dig deeper into these issues, Trustible is hosting a LinkedIn Live webinar with two leading voices in this space: Patrick Hall, Assistant Professor at The George Washington University and lead contributor to several NIST AI publications, and Sean Perryman, Global Head of AI & Fairness Policy at Uber. Join us on October 31 at 12:30 pm ET to learn more about this subject! Sign up here!

3. OMB issues AI Procurement Guidelines

The majority of organizations will have neither the financial incentives nor the deep technical expertise to create highly capable AI models. As a result, the majority of AI deployments will use AI that was bought, not built. This leaves the procurement process as the main area where many organizations will enforce their responsible and ethical AI practices. Many regulations, such as the EU AI Act, come with some enforcement measures, but regulation will only ever set a ‘floor’ for responsible AI practices. It will take competitive market pressures to raise the ‘median’ set of practices, and those practices will be enforced primarily through the AI procurement process.

Many governments recognize this and have put in place clear procurement guidelines for their own use. Government agencies are uniquely positioned to be first movers in requiring high standards for trustworthy and responsible AI, as they do not face the same competitive pressures that private sector companies do. Earlier this month, the US Office of Management and Budget (OMB) released its long-awaited guidance on AI procurement by the federal government. The guidelines mirror similar legislation passed by numerous states: they focus on AI systems that pose risks to fundamental rights or to personal safety, and they mandate that risk management practices, incident reporting, vendor transparency, and other practices be put in place by both the vendor and the deploying agency.

The federal guidelines complement other work being done by local governments (GovAI Coalition) and by standard-setting bodies like the IEEE in its upcoming P3119 standard. The impact of these guidelines won’t be confined to public sector organizations and their direct vendors, however. Vendors are responsible for ensuring that their own AI tools, subcontractors, and subprocessors also adhere to certain AI governance standards so that the AI supply chain is trustworthy. This compliance trickle-down effect is deliberate, and allows a more market-driven approach to responsible AI to complement legislative efforts.

Key Takeaway: Many responsible and ethical AI principles will be enforced when procuring AI tools. Governments and standard-setting bodies are now coming out with enforceable requirements for AI procurement that will likely have compliance ‘trickle-down’ effects.

4. Understanding AI Stakeholders with Trustible’s AI Stakeholder Taxonomy

AI governance frameworks and regulations are moving organizations towards understanding how their AI systems operate in real-world conditions. This shift means organizations are more likely to conduct impact assessments, which require a more detailed look at who is being affected by their AI systems.

Understanding and managing the implications of an organization’s AI systems requires identifying the crucial groups or individuals involved, commonly known as “stakeholders.” To address the complexities of identifying AI stakeholders, Trustible created an AI Stakeholder Taxonomy that helps organizations identify stakeholders as part of the impact assessment process for their high-risk use cases, as well as track stakeholders who should be engaged as part of post-market monitoring. Read our blog post to learn more.

5. AI Researchers win Nobel Prizes

The Nobel selection committee apparently isn’t immune to the AI hype cycle. Last week, the Nobel Prizes in both Physics and Chemistry were awarded to researchers who created foundational AI techniques that have been disruptive in those respective fields. The Physics prize was awarded to John Hopfield and Geoffrey Hinton, who drew on physics to lay the foundations of modern neural network architectures, and whose work is now used prolifically in scientific research. The Chemistry prize was awarded to Demis Hassabis, John Jumper, and David Baker, who used AI to identify protein folding patterns, an essential technique for researching new potential medical drugs and their effects. Hinton previously won the Turing Award for his work, an award which, ironically, has been called the ‘Nobel Prize in Computer Science’.

These awards underscore both how scientific concepts continue to influence the development of AI systems, and the impact those AI systems may then have on scientific fields. While a lot of public focus is given to the societal risks of AI systems, the use of AI for scientific research, and the positive feedback loops it could create, is one of the most frequently cited societal benefits. Generative AI systems are actively being used to explore new potential drug therapies, identify astronomical phenomena, and improve medical testing. As with many scientific breakthroughs, including Alfred Nobel’s invention of dynamite, there is still the potential for misuse and abuse. Regulators around the world are trying to identify how to create an ecosystem where protein folding techniques like those developed by the Chemistry prize winners are used for societal gain, and not to develop new harmful pathogens.

Our Take: The impact that AI can have on the scientific community is fairly profound, and more ‘natural sciences’ work will likely start to leverage AI in new ways.

*********

As always, we welcome your feedback on content and how to improve this newsletter!

AI Responsibly,

- Trustible team