Will AI make the internet less open?

Plus, how laws define AI and how you can adopt a simple framework for applied AI ethics.

Hi there! Lots of meaty topics in today’s edition of The Trustible Newsletter (5-minute read):

Why AI will lead to more paywalls

How laws and regulations define AI

The FCC cracks down on AI robocalls

Will California lead the way on comprehensive AI regulations?

A simple, actionable framework for evaluating AI ethics

If you’re in the insurance space (or any regulated enterprise), don’t forget to RSVP for our LinkedIn Live, ‘Operationalizing Responsible AI in Insurance,’ featuring AI governance leaders from Nationwide, Guardian Life, and Prudential.

1. The Closing of the Internet

The internet was built as an open way to share information, and for most of its existence, it was. That openness was incentivized: it allowed bots to scrape websites and index their content in search engines, which in turn drove traffic back to the sites being scraped. Recent shifts in many platforms’ business models, from ad-driven to subscription-based, have already started to change that openness and the incentives behind it, and the era of AI is putting this change on a fast track.

In recent weeks, we’ve seen a failed licensing deal with the New York Times, a subsequent lawsuit, and an AI training data licensing deal from Reddit reportedly worth $60 million per year. The Verge recently wrote about how one of the web’s basic rules of scraping etiquette, obeying robots.txt, is now being broken as the potential value of non-consensual scraping for AI has exploded. Blocking all scraping is technically difficult and can get a website delisted from search results, a death sentence for all but the largest companies. However, some content can be hidden behind paywalls or filters, protecting key IP from being used to train AI. This phenomenon is leading to a general ‘closing’ of the internet and reshaping many of the incentive structures that fueled its rise. We expect this trend to continue as AI companies grow hungrier for original data.
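For readers unfamiliar with the mechanics: robots.txt is a plain-text file at a site’s root that tells crawlers which paths they may fetch, and compliance is voluntary, since nothing technically stops a crawler from ignoring it. Here is a minimal sketch, using Python’s standard-library urllib.robotparser, of how a well-behaved crawler would check a site’s rules before fetching. The crawler names ExampleAIBot and ExampleSearchBot and the example.com paths are hypothetical, made up purely for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one (made-up) AI crawler from the whole
# site, and keep every other crawler out of the /premium/ section only.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /premium/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def may_crawl(user_agent: str, url: str) -> bool:
    """Return True if the parsed robots.txt permits user_agent to fetch url.

    This check is advisory only: a crawler that never calls it, or
    ignores its answer, can still fetch the page.
    """
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("ExampleAIBot", "ExampleSearchBot"):
        for url in ("https://example.com/articles/1",
                    "https://example.com/premium/report"):
            print(agent, url, may_crawl(agent, url))
```

In this sketch the site shuts out one AI crawler entirely while leaving its public pages open to search crawlers, which is the kind of selective blocking publishers are weighing. The rules only bind crawlers that choose to check them.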

Key Takeaways: In the age of AI, allowing web scraping is no longer a symbiotic arrangement for many companies, and most platforms are considering formal licensing deals for their content and data. This is pushing the internet to become more closed, with just about everything locked behind subscriptions.

2. How do laws & regulations define AI?

If there’s one thing that both data scientists and lawyers can agree on, it’s this: there is no universal definition of “AI.”

The race to regulate AI is producing an array of approaches, from light-touch to highly prescriptive regimes. Governments, industry, and standards-setting bodies are rushing to weigh in on what guardrails should exist for AI developers and deployers. Yet regulatory conversations often take for granted the answer to one fundamental question: What is AI?

Read here to learn more about how the US, EU, Canada, and other bodies such as the EEOC and ISO define artificial intelligence – and what this means for your organization.

3. FCC cracks down on AI robocalls

On February 2, 2024, the Federal Communications Commission (FCC) issued a declaratory ruling clarifying that robocalls using AI-generated voices are illegal under the Telephone Consumer Protection Act. The announcement came days after robocalls that used AI voice cloning to sound like US President Joe Biden discouraged New Hampshire voters from voting in the state’s January 23 primary. It also comes amid the FCC’s recently launched inquiry into the impact of AI technologies on unwanted robocalls and texts.

Our Take: The ruling does little to change the current robocall rules; in fact, the FCC would be required to hold a notice-and-comment period before adopting new rules. However, the FCC has taken an aggressive stance against unwanted and illegal robocalls and robotexts. This recent ruling may be the tip of the spear when it comes to how the FCC will use its authority to prevent fraudsters from using AI to harm consumers.

4. Will California lead the way on comprehensive AI regulations?

California is known for being a first mover in the regulatory space, especially on issues related to emerging technologies. It was the first state to enact data breach notification requirements for consumers, and it implemented the first comprehensive state privacy law. California may once again be a first mover, this time on AI regulation. On February 7, 2024, State Senator Scott Wiener introduced the Safe and Secure Innovation for Frontier Artificial Intelligence Systems Act (SB 1047), which would impose comprehensive rules on AI model developers. The proposed bill attempts to leverage standards from the National Institute of Standards and Technology, as well as other recognized standards-setting bodies, to ensure the safe development and deployment of large-scale AI models. The proposed law would also establish the Frontier Model Division, which would oversee compliance with the law and issue additional regulations as necessary.

Key Takeaways: While the bill has a long way to go before becoming law, it further highlights the desire to impose regulations throughout the AI ecosystem. In this case, the proposed law would implement a fairly prescriptive regulatory regime on developers of large AI models throughout the models’ lifecycle (i.e., pre-training, pre-deployment, and post-deployment).

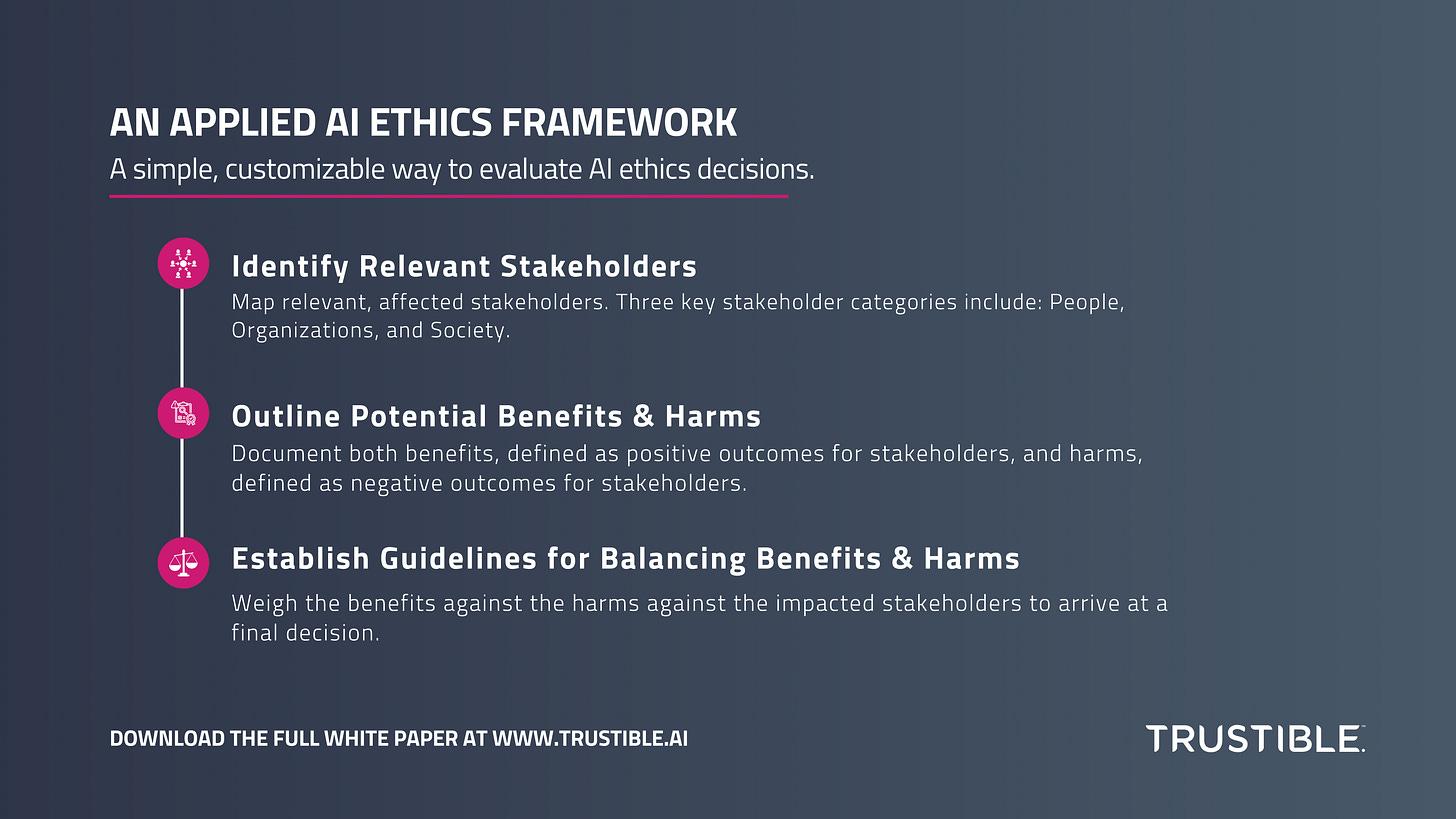

5. A simple, customizable way to evaluate AI ethics decisions

Ethics can be challenging. Many organizations talk about Ethical AI but struggle to define it clearly. They often settle on sets of high-level principles or values, then struggle to operationalize them. This gap between high-level principles such as ‘safety,’ ‘transparency,’ and ‘fairness’ and day-to-day practice makes it hard for teams to answer the question: ‘Is it ethical to create or deploy a specific AI product or service?’

Trustible has developed a framework to help organizations define their principles and translate them into actionable rules, identify impacted stakeholder groups, and frame key tradeoff decisions between benefits and harms. We call it an ‘applied ethics’ framework because ML teams face ethical challenges daily and need a clear set of principles to ensure they are maximizing benefits and minimizing harms.

Download the full white paper here.

*********

As always, we welcome your feedback on content and how to improve this newsletter!

AI Responsibly,

- Trustible team