AI Chatbots are not ready for kids

Plus the White House urging AI use, insights on AI-enabled computers, deep fakes, a framework to quantify AI Benefits

Hi there!

Don’t forget to register to our webinar tomorrow What to do when you discover AI bias at 12:30pm ET / 9:30am PT. In today’s edition.

AI Chatbots are not ready for kids

White House memo urges increase use of AI in Defense and National Security

AI-controlled computers bring new benefits and risks

A framework for measuring the benefits of AI

The free speech challenges with deep fake laws

1. AI Chatbots are not ready for kids

Character AI, a popular AI platform known for creating ‘high personality’ chatbots, is facing a major crisis after being sued by the family of a recently deceased teen. In their lawsuit, the family of Sewell Setzer alleges that Character AI’s chatbot pushed Sewell to take his own life after engaging in a months-long discussion with one of the AI personalities he created on the platform. This latest tragedy highlights several issues including anthropomorphizing AI systems, incorporating appropriate guardrails, the impacts that AI can have on children, and issues of liability.

Anthropomorphization (i.e. the assignment of human-like characteristics) of AI has been a concern since early dialog systems in the 1970s. While life-like AI systems have some benefits like encouraging creativity, they can exaggerate the capabilities of the systems and encourage undue trust in the AI. Research into psychological aspects of such systems is early, and it is unclear how AI can best support mental health concerns.

In terms of guardrails, Character AI announced a slew of new safety measures including time limits, dedicated models for minors, and removing certain ‘characters’ (AI avatars) that no longer met updated safety guidelines. However, many of these measures were immediately met with backlash from long-time Character AI users whose characters were taken down or no longer responded in the same ways as before. Appropriate guardrails need to balance performance with safety (Meta and Gemini Image Generators creating ahistoric images of black colonials is a similar but different example of the dilemma). The use of AI by minors remains a pressing and understudied issue, especially as social media platforms are installing more restrictions for those under 18.

The lack of research is paired with a lack of clear regulations on life-like chatbots. Even leading regulations like the EU AI Act, which prohibits intentional manipulative systems, don’t have clear safety requirements beyond basic transparency for seemingly ‘fun’ chatbot systems like Character AI.

Key Takeaway: Modern AI chatbots easily pass the ‘Turing Test’, but the effects that can have, especially on minors is unclear – and potentially deadly. Meanwhile, AI companies are trying to balance their own unclear legal and ethical liability challenges while facing backlash from their own users over what should be permitted.

2. White House memo urges increase use of AI in Defense and National Security

At the federal level in the US, the AI policy debate isn’t just about safety vs innovation – it’s also about economic and military competitiveness with other countries such as China. Last week, President Biden sent out a new memo urging AI adoption for national security purposes. This memo, a followup to last year’s executive order, outlines a new AI governance and risk management framework for the national security space, foundation model testing expectations from the AI Safety Institute, and coordination of AI safety research with allied governments. The memo also notably includes several discussion points around attracting AI talent into the US and for public sector work. There was an additional classified Annex to the memo that was not publicly released.

The new national security AI governance framework somewhat mirrors the structure of the EU AI Act as it outlines several prohibited and high risk use cases, and then details various risk management activities that must be implemented for high risk uses. These activities include conducting risk and impact assessments, implementing technical testing processes, and building training materials.

While individual states have the authority to regulate certain commercial AI applications within their borders and the use of AI by state governments, national security is entirely the domain of the federal government. This prioritization reflects a growing emphasis on responsible AI adoption. Similarly, the EU AI Act primarily focuses on commercial product safety and explicitly excludes AI related to national security and military use. As a result, each EU member state is responsible for regulating these specific AI applications within their respective territories.

Key Takeaway: Maintaining a technological advantage in the national security space is a top priority for the US government. The defense sector is looking to rapidly implement clear risk management practices in order to accelerate how fast it can responsibly deploy AI.

3. AI-controlled computers bring new benefits and risks

Anthropic recently announced that the updated Claude Sonnet 3.5 model can automatically use a computer. The model ingests images of the screen and instructions, and outputs directions to move and click the mouse and type text into a virtual keyboard. This application is one of many efforts to automate day-to-day user interactions with computers (Google is currently working on a system that would control the web browser, and Apple Intelligence brings enhanced AI to a broader range of iPhone functionality). This technology can be seen as an extension of AI Agents, discussed in a previous newsletter, but AI-controlled computers amplify existing risks and introduce new ones. The top risks to consider are:

Data Privacy: The Claude model ingests screenshots of the whole screen and can inadvertently consume sensitive data that is not necessary for model functionality (e.g. other tabs in your browser or credit card information). While Anthropic states that user-submitted data is never used to train AI models, the same standards may not apply to future tools. In contrast, Apple Intelligence will do most processing directly on-device and use external “Private Cloud Compute” for complex queries (an approach that does not store data).

Excessive Agency: While this risk is a concern for existing AI Agents, there are still limitations to what systems they can access. A computer-controlling AI System could have unfettered access to a computer and cause external harm or damage the computer itself. As this technology becomes more wide-spread, careful controls will need to be added to what actions can be taken (e.g. Claude will not be able to modify System Preferences).

Indirect Prompt Injection: As Claude (or a similar system) functions by interpreting the text and the images on the computer screen, a bad actor could place bad instructions onto a website that direct the model to do something harmful. Given that these models will interact with a large variety of content on a computer, it will be hard to predict sources of these injections. Controls discussed in excessive agency can mitigate some of these potential harms.

Lack of Education: Current AI Agents require some coding ability and typically go through a technical review process before they are deployed. In contrast, “computer use” AI could be accessed by anyone locally by installing an additional software program. Without proper education, users may be more susceptible to the risks described above.

Despite these potential risks, Anthropic has rated Claude a 2 on their Responsible Scaling Policy, the same grade as previous iterations of the model. Apple Intelligence has limited agency and Google’s new model has not yet been released. However, AI that can control a computer and other general-purpose AI Agents are likely to become more common over the next year.

Key Take-aways: Claude Sonnet-3.5’s ability to interact with and control computers like a human introduces a new range of potential benefits and risks. Some risks surrounding privacy and security are similar to existing AI systems, but the greater accessibility of this technology heightens the need for broader education of AI risks.

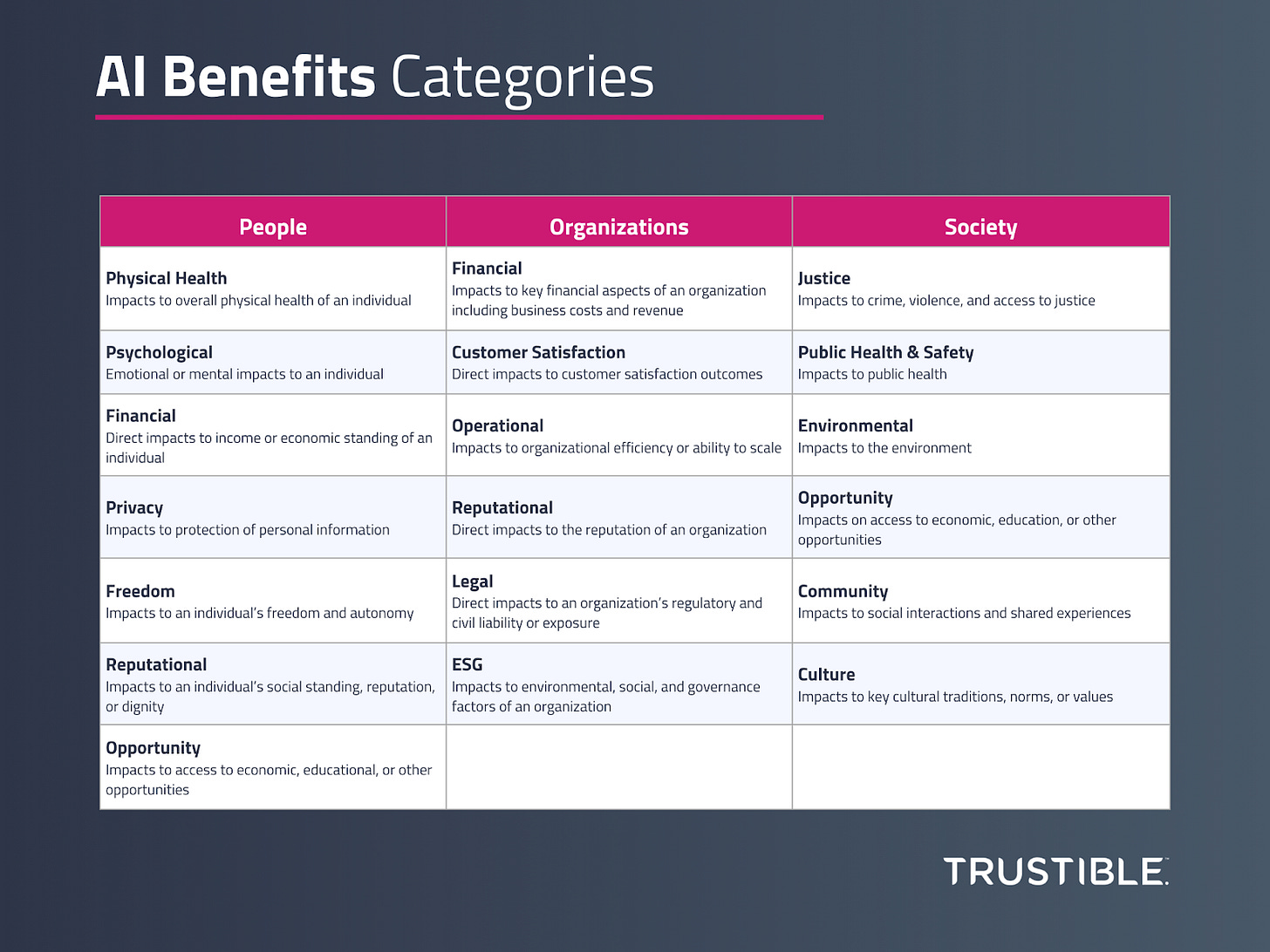

4. A framework for measuring the benefits of AI

In our latest blog, A Framework for Measuring the Benefits of AI, we introduce a structured approach to assessing the tangible and intangible benefits of AI to organizations.

While the investment and research into AI risks are crucial (Trustible is proud to contribute to the US AI Safety Institute Consortium), focusing only on the potential harms is omitting the other side of the equation when it comes to AI ethics: benefits. The basis for many ethical decisions is that the benefits of using AI for a certain task outweigh the risks. However, you can only make that assessment if the potential benefits of an AI system are explicitly identified and quantified.

In this post, we explore practical metrics that capture AI’s real-world benefits, from increased productivity to improved decision-making, and discuss ways to integrate these metrics into strategic planning. Read the full post here.

5. The free speech challenges with deep fake laws

The growing use of generative AI tools has raised concerns over the spread of false or misleading content. Chief among those concerns is misusing generative AI in election-related communications, especially in a year when nearly half the world’s population is voting. In the U.S., federal lawmakers have made little progress in passing legislation related to AI generated election content, whereas states have moved aggressively to enact laws that prevent the spread of false election information. Since 2023, approximately 40 states have introduced bills that address the use of generative AI in political or election-related communications. While not all of the proposed bills were enacted into law, lawmakers in states like California, Colorado, and Minnesota were successful. These laws specifically target the use and impact of deep fakes in election-related communication. California also enacted a law requiring large online platforms to block deceptive content related to California elections with certain exemptions. However, as well intended as these laws may be, a handful of lawsuits show that these laws may raise First Amendment free speech concerns.

The First Amendment generally protects a person’s right to say something, as well as a person's right to not say something. Under certain circumstances, the government can limit a person’s right to speak when its interest to prevent the speech is “narrowly tailored” to achieve that interest (a legal standard known as strict scrutiny). In lawsuits filed against laws in California, Colorado, and Minnesota, the plaintiffs argue that prohibiting their ability to create deep fakes about the election infringes on their right to offer political commentary. The lawsuits raise questions about the value of deep fakes as political speech, given the content and context is generally false or misleading, and whether deep fakes specifically are ripe for regulation versus addressing mis-and dis-information more broadly. Additionally, some of the deep fake laws require disclosures in the content to alert viewers that parts of the content are AI-generated. This raises an issue of compelled speech, meaning that the government is requiring a person to say something (i.e., the disclosure) when they may not otherwise say something.

Our Take: Deep fakes are not generally thought of as adding much value to public discourse and can actually cause more harm than good. However, the complexities of First Amendment law may outweigh the concerns raised by deep fakes. Groups like the American Civil Liberties Union have argued against some of the deep fake laws because of issues over their impact on people’s First Amendment rights. The constitutional questions raised through these state-level legal battles may also give pause to federal lawmakers in their efforts to combat AI generated content like deep fakes.

*********

As always, we welcome your feedback on content and how to improve this newsletter!

AI Responsibly,

- Trustible team