How Will the Next Economic Downturn Impact AI Adoption?

Plus: New Model Ratings, the case for semantic versioning in AI, and Europe’s AI Industry roadmap

In today’s edition (5-6 minute read):

1. How Will the Next Economic Downturn Impact AI Adoption?

2. Trustible announces Model Ratings V2

3. AI Needs Semantic Versioning

4. A New Roadmap for Europe’s AI Industry

1. How Will the Next Economic Downturn Impact AI Adoption?

One of the longstanding concerns about AI is its potential to cause widespread employment disruption. Research, like Anthropic’s Economic Index, shows that AI’s growing pervasiveness is having a surprising impact on white-collar jobs. Bill Gates also recently made headlines when he predicted that AI will replace a large number of blue- and white-collar professions. Yet a major roadblock to a massive employment realignment is the pace of AI adoption. IBM released a study highlighting AI adoption challenges for organizations this year, which include lacking a financial or business justification. But as President Trump’s aggressive tariff policy threatens a potential recession, organizations may find that an economic downturn both justifies and accelerates AI adoption.

Fortune recently highlighted how history serves as a great indicator of how the next recession may accelerate AI adoption. The article notes that the Great Depression led to major technological improvements for certain economic sectors. Similarly, the Great Recession in 2008 helped accelerate cloud adoption, and the economic downturn during the COVID-19 pandemic led to greater automation. As organizations begin to feel the financial constraints of economic headwinds, those constraints may push them to integrate AI into existing business operations in the face of workforce reductions.

In fact, we are seeing a greater push by the federal government for agencies to adopt more AI tools at the same time as the Trump Administration continues to downsize the federal workforce. The Office of Management and Budget released two memos (M-25-21 and M-25-22) that strongly encourage federal agencies to adopt AI solutions and outline how agencies should procure AI tools and vendors. Such guidance is pushing agencies to restructure operations in light of their workforce reductions.

Our Take: Recessions and economic downturns cause widespread hardship, but also realign the economy once the hardship abates. An economic downturn could be the catalyst to accelerate AI adoption, but replacing human jobs with AI comes with its own risks and will underscore the need for robust AI governance procedures.

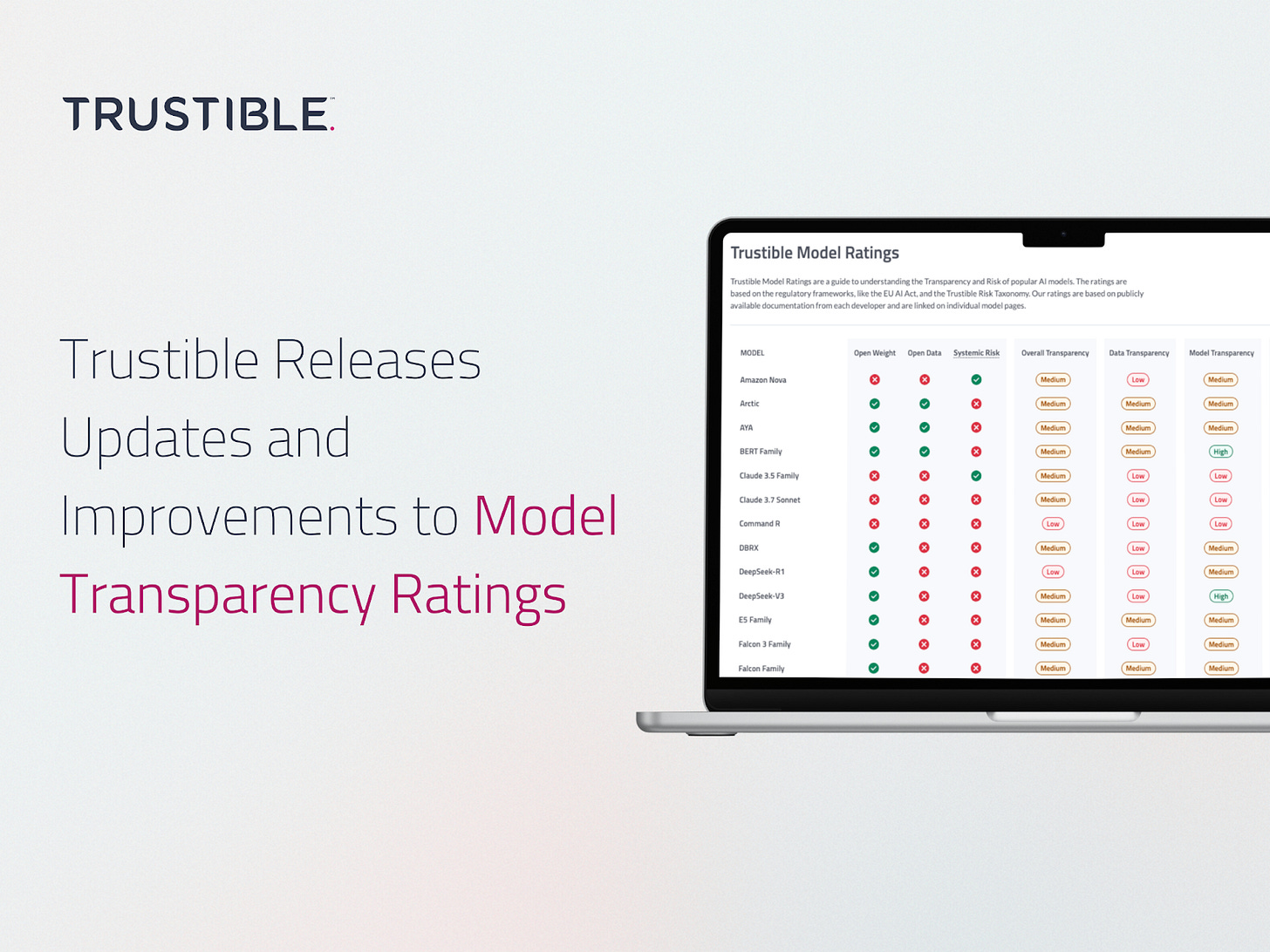

2. Trustible announces Model Ratings V2

Last week, Trustible officially released our Model Ratings V2, which features a comprehensive model transparency assessment for the 39 top LLMs. A key component of building an AI system is choosing a model that is suitable for the task(s) and minimizes risks. However, basic information about a model is often obscure and scattered across many sources (e.g., release announcements, long system cards, and GitHub pages). Our Model Ratings updates introduce several key features:

Updates to the Data Section. Our new Data categories test whether the most important aspects of the data preparation process were handled. We also included new categories that evaluate whether the developers discuss specific preprocessing requirements.

Expanded Model Evaluations. We expanded the Evaluations section to cover all models, whereas previously we focused on models that had ‘systemic risk’ under the EU AI Act.

New Summary Ratings. We have now created a separate “EU AI Act” Grade that is aligned with the requirements in the Act. We also introduced a rating that aligns with California’s proposed Training Data Transparency bill.

During our update process, we made several interesting discoveries about the current state of transparency:

Broad Range of Ratings. Grok 2 and 3 ranked at the bottom with a 25% overall rating, while the OLMo models ranked at the top with 80% ratings. For all models, Data Transparency was a point of weakness: nearly a quarter of the models received a rating of 10% or less.

Score Decreases Across Model Generations. Scores decreased across successive model generations, which stands in contrast with findings from Stanford’s 2025 AI Index that reported an increase in transparency from 2023 to 2024.

Difficulty Comparing Evaluation Results. While a standard set of benchmarks is used to evaluate most models, evaluations are rarely done using public tools, making it difficult to meaningfully compare the results. In our ratings, we highlight the approach to evaluation and separate marketing language from technical details.

Key Takeaway: Model transparency is a crucial tool for mitigating risks associated with AI systems and is a focus of some legislation. However, our ratings show that transparency is not a priority for most model developers, especially when it comes to the training data. It remains to be seen whether consumer or regulatory pressure will change this pattern.

3. AI Needs Semantic Versioning

Over the last few weeks, the internet was awash with GPT-4o’s new image generation capabilities, which allow users to easily transform existing photos into a different style based on simple prompting. This led to a deluge of Studio Ghibli style images and, more recently, a trend of people turning their dogs into humans. While these trends raise obvious copyright and ethical issues, including ones that Studio Ghibli’s creator would take particular issue with, those have already been discussed thoroughly in the media. Instead, we want to highlight another kind of issue from these new capabilities: Why is the model still just called GPT-4o despite such radical new capabilities that create intense new legal risks for OpenAI and its users? Also, OpenAI’s release pattern for GPT-4x models has been: GPT-4 (March 2023), GPT-4o (May 2024), GPT-4.5 (Feb 2025), then GPT-4.1 (April 2025), which is confusing to say the least. OpenAI is not planning on releasing a System Card for the newly announced GPT-4.1, claiming that it isn’t a ‘frontier model’. While the ‘safety’ aspects are only required for ‘frontier’ models under the EU AI Act, all other transparency requirements for GPAI models still apply.

In software engineering, most platforms use ‘semantic versioning’ (SemVer) to indicate to the system’s users what kinds of changes are present. The most typical form (Major.Minor.Patch) signals whether a release contains any ‘breaking’ changes to the system’s APIs or functionality (which increment the ‘major’ version), information that is essential for groups integrating with it. GPT-4o’s new capabilities were announced in a product-marketing release without any further technical details or reports, and it’s still just ‘GPT-4o’, not ‘GPT-4o.2’ or ‘GPT-4o.7.2a’ or something similar. Deployments of these models on cloud platforms like Azure AI still don’t use any form of SemVer, and instead use a release date as the tag (e.g., GPT-4o on Azure is listed as gpt-4o (2024-11-20) with only minimal bullet points about the differences between deployment versions).
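To make the contrast concrete, here is a minimal Python sketch of what a Major.Minor.Patch tag lets an integrator automate, and what a date tag does not. Everything in it is an assumption for illustration: the helper names (`parse`, `change_kind`, `needs_review`), the review policy, and the version numbers are hypothetical and do not correspond to any real OpenAI or Azure release. The parser is also simplified and ignores SemVer’s pre-release and build-metadata suffixes.

```python
import re
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int


# Simplified pattern: plain Major.Minor.Patch only (no pre-release or build metadata).
_SEMVER = re.compile(r"^(\d+)\.(\d+)\.(\d+)$")


def parse(tag: str) -> Version:
    """Parse a Major.Minor.Patch tag, rejecting anything else (e.g. a date tag)."""
    match = _SEMVER.match(tag)
    if match is None:
        raise ValueError(f"not a Major.Minor.Patch version: {tag!r}")
    return Version(*(int(part) for part in match.groups()))


def change_kind(current: str, candidate: str) -> str:
    """Classify an upgrade as 'breaking', 'feature', 'patch', or 'none'."""
    old, new = parse(current), parse(candidate)
    if new <= old:
        return "none"  # same version or a downgrade: nothing new to adopt
    if new.major != old.major:
        return "breaking"
    if new.minor != old.minor:
        return "feature"
    return "patch"


# Hypothetical enterprise policy: new capabilities (a minor bump) can carry
# new legal risk, so both breaking and feature releases need sign-off.
REVIEW_ON = {"breaking", "feature"}


def needs_review(current: str, candidate: str) -> bool:
    return change_kind(current, candidate) in REVIEW_ON


if __name__ == "__main__":
    print(change_kind("4.1.0", "4.1.3"))   # patch-level change
    print(needs_review("4.1.0", "4.2.0"))  # new capability under this policy
    try:
        parse("2024-11-20")  # a date tag carries no compatibility signal
    except ValueError as err:
        print(err)
```

Under this sketch, a new capability such as image generation would surface as at least a minor bump that a governance process could gate on, whereas a date tag only says when something changed, not what kind of change it was.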

Integrating with third-party models already came with both benefits and risks. While some groups will like the constant improvements, systems that require consistency and robustness need to control when and how they upgrade to a new version, and to know what changed between versions. An enterprise may have greenlit ChatGPT previously, but not have approved new models that may expose it to risks such as new copyright infringement issues. Critical systems leveraging AI will not be built until there are stronger guarantees that they simply won’t stop working due to a system change.

Key Takeaway: The internet, and especially the Cloud, was built on robust APIs that provided consistent functionality, and proper systems for documenting and notifying about breaking changes. AI needs to develop its own standards for this in order to mature enough to be used reliably for critical systems.

4. A New Roadmap for Europe’s AI Industry

The European Union (EU) is continuing its efforts to change the narrative around its AI policy approach. On April 8, 2025, the European Commission (EC) announced its “AI Continent Action Plan,” which sets out an ambitious roadmap to bolster the EU’s AI industry. The EC’s latest move aligns with a broader push to pivot away from an over-regulated AI stance to a more innovative and robust European AI ecosystem.

The Action Plan highlights key areas for growth, which include building large-scale AI infrastructure, increasing access to high-quality data, accelerating AI adoption, improving AI talent pipelines, and simplifying AI regulations (i.e., the EU AI Act). An open question on regulatory simplification is the degree to which the EC will seek to amend the EU AI Act, especially given interest in doing so from industry and member states (i.e., Germany and France). The EC is seeking public input on AI infrastructure and improving AI adoption, and plans a forthcoming public consultation on accessing high-quality data.

The EC’s Action Plan offers some contrast with America’s approach. The EC’s Plan specifically seeks to encourage EU-made models, which conflicts with the Trump Administration’s broader goals for promoting US-made AI. The Trump Administration has touted targeted initiatives to fortify America’s AI infrastructure, and Nvidia recently announced a $500 billion investment in US-made AI servers, which the White House claims happened because of President Trump’s tariffs. We also expect an emphasis on US-made AI in the U.S. federal government’s AI Action Plan, which is expected to be released on or about July 25, 2025. The EC Plan’s intention to develop European AI models, as well as its notable lack of language about international cooperation, further underscores the rippling effects of the Trump Administration’s isolationist policies.

Our Take: A robust secondary AI ecosystem in the EU provides a strong counterbalance to concerns about China’s tech influence. However, a bifurcated Western AI market in which Europe can write rules for EU-specific AI technology can present new AI governance challenges and disrupt efforts to harmonize AI standards.

—

As always, we welcome your feedback on content and how to improve this newsletter!

AI Responsibly,

- Trustible team