The message is clear: innovation is the top AI policy priority for the US

Plus, lots of OpenAI news, insights on AI Governance Committees, and LLMs in scientific research.

Hi there! There’s been so much AI news over the past 2 weeks that we can’t summarize everything that happened, but here’s our view on the things that matter most. 6-minute reading time.

AI Expo sets the stage for innovation as the top AI policy priority

OpenAI has had a busy news cycle

Enhancing the effectiveness of AI Governance Committees

Surprise surprise: LLMs are being used in scientific writing

The patchwork of state AI regulations can negatively impact innovation

1. AI Expo sets the stage for innovation as the top AI policy priority

While the EU is weeks away from officially enacting a landmark AI regulation, US-based policymakers have set a very different agenda. Last week, the Special Competitive Studies Project (SCSP), a think tank funded by former Google CEO Eric Schmidt, hosted a massive AI Expo in Washington, DC focused on maintaining the US’s head start and global dominance in AI. The expo brought together key government leaders, especially from the defense and intelligence sectors, and industry leaders to discuss policy solutions for keeping the US competitive in technology. Microsoft used the event to announce an air-gapped AI product built exclusively for the intelligence sector. Senator Schumer (D-NY) discussed the Senate’s AI legislative roadmap, released on the morning of May 15, which focuses heavily on AI innovation and national competitiveness.

Both the AI Expo and the Senate AI roadmap made one thing very clear: winning the ‘AI Arms Race’ is DC’s number 1 AI priority. While many policymakers are worried about the risks of AI, those concerns often take second place to fears of falling behind technologically. China faces several challenges in AI, including poor access to AI chips, an inability to attract international AI talent, and a sluggish economy. But the Chinese government has already made AI development a top funding priority. Efforts are kicking off this week to open a dialogue between the two superpowers on certain ground rules, much in the spirit of the arms control treaties the US negotiated with the USSR during the Cold War, but it is not yet clear what those talks will produce.

Our Take: In contrast to the EU, the priorities in DC are, in order, 1) ensure the US stays ahead of China on AI, and 2) protect society from the harms of AI. Any legislation to emerge from DC in the next year will likely reflect this order of priorities.

2. OpenAI has had a busy news cycle

OpenAI, the undisputed Generative AI leader, had a series of major announcements in the past week, each of which could be the focus of a section, but here’s the TL;DR on each:

OpenAI Launches GPT-4o

OpenAI’s new model is a highly capable multimodal AI that can take several different content formats as input and generate multiple content formats as output. GPT-4o demo videos showed impressive capabilities for understanding voice and for generating images with clear embedded text, although the model also seems to have a strong ‘personality’ (or ‘AI-ality’?). Many of GPT-4o’s capabilities look very similar to those of Project Astra, an ‘AI Agent’ app Google announced this week at Google I/O, albeit to much less fanfare.

Our Take: Multimodal models will likely become more commonplace, but they introduce even more potential risks, and there’s very little research into safety implications for them.

OpenAI joins Steering Committee for the Coalition for Content Provenance and Authenticity (C2PA)

C2PA is leading efforts to set content provenance standards, covering both watermarking for synthetic content and authenticity credentials for human-generated content. C2PA supports several initiatives, including the Adobe-led Content Credentials project for images and the Microsoft-sponsored Project Origin, and may soon include Google’s new standard for watermarking generated text.

Our Take: Content watermarking and authenticity certificates can both be strong tools for a future where most generated content is traceable and authentic content can be verified. Model creators may also have an incentive to watermark their outputs, since doing so would help them keep generated content out of future training sets and ensure a steady stream of high-quality, authentic training data.

OpenAI publishes ‘Model Spec’

The Model Spec is a newly proposed documentation standard for describing the expected behavior of general-purpose AI systems. In particular, OpenAI’s Model Spec tries to identify highly nuanced user interactions and documents how the model is intended to behave in each. Foundation models are often fine-tuned using a technique known as Reinforcement Learning from Human Feedback (RLHF), and the Model Spec essentially documents what that feedback looks like. Other model developers could adopt this standard to tell end users what safety behaviors to expect from their models.

Our Take: The specification is helpful, but adversarial actors will probably also use it to figure out exactly how to circumvent the documented safety features. We expect that not all model creators will agree with OpenAI’s choices about what a model should and shouldn’t do.

Executive Departures

Both Chief Scientist Ilya Sutskever and Jan Leike, who led OpenAI’s Superalignment team, announced their departures from OpenAI this week. Neither gave a reason for leaving, and neither directly linked their departure to any recent OpenAI initiatives.

Our Take: Big executive moves, and the secrecy around them, have made investors, regulators, and the public anxious about who is in control of the world’s most powerful AI systems.

3. Enhancing the effectiveness of AI Governance Committees

Many organizations have created cross-functional AI governance committees responsible for strategy, governance, and oversight of their AI systems. But any sort of committee comes with challenges of its own, including how to build accountability, get buy-in for the committee's efforts, and ensure that everyone is equally informed.

Trustible recently did a deep dive into best practices for AI Governance committees, and how our platform can help accelerate, standardize, and scale their efforts. In it, we discuss the top priorities of AI governance committees, why they must act now, and how the features and capabilities of the Trustible platform fit into their operational and strategic priorities.

Download our published white paper here.

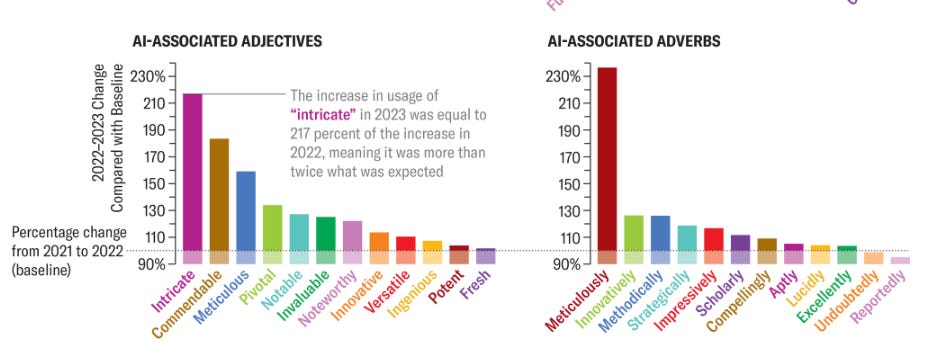

4. Surprise surprise: LLMs are being used in scientific writing

A recent study found that LLMs (primarily ChatGPT) were used to write some or all of at least 1% of scientific articles published over the last year. The researchers reached this conclusion by analyzing over 140 million papers published in 2023 and finding a disproportionate uptick in the use of words like “meticulous” and “commendable” that are often associated with AI-generated text. While LLMs can empower researchers whose primary language is not English, they can also cite non-existent papers and produce other types of hallucinations (i.e. factual errors). If erroneous facts get published, future researchers may cite them as fact, perpetuating the incorrect information. The study did not analyze the dataset for hallucinated citations, but fake citations have been caught in legal briefs over the last year. While peer review is required by many academic publications, reviewers often will not check every source or be able to rerun experiments, so they are unlikely to catch these types of errors. A separate study suggests that LLMs are also being used to aid peer review, which could further reduce the likelihood that such errors get caught.
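The core idea behind the study, comparing how often certain “marker” words appear in papers before and after ChatGPT’s release, can be illustrated with a minimal Python sketch. This is not the researchers’ actual method: the function names, the per-10,000-token rate, and the toy corpora are illustrative assumptions, and the marker list contains only the two words named above. A real analysis would also need to account for how word usage was already trending before 2023.

```python
import re
from collections import Counter

# Illustrative marker words only (the two named in the study coverage above).
MARKER_WORDS = {"meticulous", "commendable"}


def marker_rate(documents, markers):
    """Return marker-word occurrences per 10,000 tokens across all documents."""
    counts = Counter()
    total_tokens = 0
    for doc in documents:
        # Crude tokenizer: lowercase alphabetic runs; exact matches only,
        # so "meticulously" is NOT counted as "meticulous".
        tokens = re.findall(r"[a-z]+", doc.lower())
        total_tokens += len(tokens)
        counts.update(t for t in tokens if t in markers)
    if total_tokens == 0:
        return 0.0
    return 10_000 * sum(counts.values()) / total_tokens


def relative_uptick(baseline_docs, recent_docs, markers=MARKER_WORDS):
    """Ratio of the recent marker rate to the baseline marker rate."""
    base = marker_rate(baseline_docs, markers)
    recent = marker_rate(recent_docs, markers)
    # Avoid dividing by zero when the baseline corpus has no marker words.
    return recent / base if base > 0 else float("inf")


# Hypothetical toy corpora standing in for pre- and post-ChatGPT papers.
papers_2022 = [
    "We present a careful analysis of the data.",
    "A meticulous review of prior work follows.",
]
papers_2023 = [
    "We present a meticulous analysis of the data.",
    "This commendable effort yields meticulous results.",
]

print(round(relative_uptick(papers_2022, papers_2023), 2))  # prints 3.21
```

A ratio well above 1 only shows that marker words became more common across a corpus; it cannot tell you whether any individual paper was written with an LLM, which is why estimates like the 1% figure describe the literature as a whole.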

Our Take: LLMs can aid the scientific process, but researchers need better education about their limitations.

5. The patchwork of state AI regulations can negatively impact innovation

In early 2023, Congress teased the idea that it wanted to take action on AI regulations. It began when Senator Chuck Schumer (D-NY) announced a series of forums to help educate Senators on how best to tackle the increasingly pervasive technology. As discussions continued to occur in Washington, state legislatures appeared to take a wait-and-see approach, as evidenced by the types of legislation enacted in 2023. By and large, states sought to enact narrower, sector-specific AI regulations. However, that approach has seemingly changed direction this year.

Over the past few months, several states have begun seriously considering a more wholesale approach to AI regulation. Colorado and Connecticut have proposed comprehensive private sector AI rules (SB24-205 and SB 2, respectively). The Connecticut bill was ultimately killed when Governor Ned Lamont threatened to veto it. Colorado Governor Jared Polis has not signaled whether he will sign his state’s bill. Meanwhile in California, state legislators are moving a comprehensive AI bill (AB-2930) through the committee process. Each of these bills takes a risk-based approach to AI regulation similar to the EU AI Act’s, but is far less prescriptive.

This recent and serious push may signal that states have a bearish outlook on Congress’s ability to pass meaningful AI rules, especially as the November election approaches. While the recently introduced, bipartisan American Privacy Rights Act includes several provisions regulating AI, there is reason to be skeptical that it will pass. Moreover, Senator Schumer’s recently released bipartisan AI policy roadmap signals that federal AI legislation will be a piecemeal, committee-driven process. If state lawmakers were looking for reassurance that Congress would take a coordinated and comprehensive approach, the roadmap is unlikely to put their doubts to rest.

Our Take: The sweeping societal impacts of AI suggest this issue is not best served by the emerging patchwork approach. It could produce a range of AI laws that do more harm than good when it comes to encouraging innovation, and create yet another multi-state “check the box” compliance exercise rather than instill a national culture of responsible AI by design.

*********

As always, we welcome your feedback on content and how to improve this newsletter!

AI Responsibly,

- Trustible team